DevOpsLinks

#DevOps #SRE #PlatformEngineering

📝 The Opening Call

FAUN.sensei() is finally live.

The first 6 courses are out, and this feels like the start of something big. To mark the launch, I'm giving every subscriber 25% off with the code SENSEI2525. The discount is available for 1 week. Use it whenever you want, as many times as you need - just apply the code at checkout.

The name matters to me. Sensei, Seonsaeng, or Xiansheng (先生) is an old honorific shared across Japanese, Korean, and Chinese cultures. It means “one who comes before” - someone who guides you because they've lived the journey already. That's the idea behind this platform.

Here are the courses available at the moment:

👉 End-to-End Kubernetes with Rancher, RKE2, K3s, Fleet, Longhorn, and NeuVector - The full journey from nothing to production

👉 Building with GitHub Copilot - From Autocomplete to Autonomous Agents

👉 Observability with Prometheus and Grafana - A Complete Hands-On Guide to Operational Clarity in Cloud-Native Systems

👉 DevSecOps in Practice - A Hands-On Guide to Operationalizing DevSecOps at Scale

👉 Cloud-Native Microservices With Kubernetes - 2nd Edition - A Comprehensive Guide to Building, Scaling, Deploying, Observing, and Managing Highly-Available Microservices in Kubernetes

👉 Cloud Native CI/CD with GitLab - From Commit to Production Ready

Thanks for being here at the start of this journey. More is coming!

— Aymen (@eon01), Founder of FAUN.dev()

🔍 Inside this Issue

This one toggles between calm and chaos—FreeBSD’s deliberate engineering, petabyte logs tuned to sprint, cost landmines, and a worm with a dead-man’s switch. If you’re tightening spend, hardening supply chains, or chasing five nines, the threads connect—details inside.

💌 A Love Letter to FreeBSD

💸 AWS Optimizer Targets Unused NAT Gateways for Cost Savings

🛠 Collaborating with Terraform: How Teams Can Work Together Without Breaking Things

🐜 GitLab Uncovers Massive npm Attack - Developers on High Alert

🎬 How Netflix optimized its petabyte-scale logging system with

🛡 How when AWS was down, we were not

🔎 Researcher Scans 5.6M GitLab Repositories, Uncovers 17,000 Live Secrets and a Decade of Exposed Credentials

🌍 Self Hostable Multi-Location Uptime Monitoring

⚠️ The $1,000 AWS mistake

🤖 The AI Gold Rush Is Forcing Us to Relearn a Decade of DevOps Lessons

Fewer surprises, more uptime—go make something tougher than your cloud.

See you in the next issue!

FAUN.dev() Team

ℹ️ News, Updates & Announcements

faun.dev

Luke Marshall pointed AWS Lambda, SQS, and TruffleHog at 5.6 million public GitLab repos. In 24 hours, the setup surfaced 17,000+ live secrets.

The pipeline handled retries, skipped dupes, and auto-notified 2,800+ orgs. Result? Over 400 GitLab tokens revoked - clean cut.
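For a feel of what "handled retries, skipped dupes" can look like in code, here is a minimal sketch. It is not the researcher's actual pipeline: the `scan` callable, the `fingerprint` helper, and the choice to dedupe on a hash of repo plus secret are all assumptions made for illustration.

```python
import hashlib
from typing import Callable, Iterable, List, Set, Tuple


def fingerprint(repo_url: str, secret: str) -> str:
    """Stable key for a finding; hashing avoids keeping the raw secret around."""
    return hashlib.sha256(f"{repo_url}\n{secret}".encode("utf-8")).hexdigest()


def scan_with_retries(
    scan: Callable[[str], List[str]], repo_url: str, attempts: int = 3
) -> List[str]:
    """Call a (hypothetical) scanner, retrying on failure.

    Re-raises the last error once the final attempt has failed.
    """
    for attempt in range(1, attempts + 1):
        try:
            return scan(repo_url)
        except Exception:
            if attempt == attempts:
                raise
    return []  # only reached when attempts < 1


def unique_findings(
    repos: Iterable[str], scan: Callable[[str], List[str]]
) -> List[Tuple[str, str]]:
    """Scan every repo and keep each (repo, secret) finding once."""
    seen: Set[str] = set()
    results: List[Tuple[str, str]] = []
    for repo in repos:
        for secret in scan_with_retries(scan, repo):
            key = fingerprint(repo, secret)
            if key in seen:
                continue  # duplicate finding, skip it
            seen.add(key)
            results.append((repo, key))
    return results
```

In a queue-driven setup the same idea usually lives in the message consumer: a failed scan goes back on the queue, and a seen-set (or a keyed table) keeps repeat deliveries from producing repeat notifications.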

faun.dev

GitLab just flagged a sharp new npm supply chain attack built around a malware strain dubbed Shai-Hulud. It does more than slip bad code into packages - it hijacks developer machines, scrapes secrets from AWS, GCP, Azure, and GitHub, and pushes poisoned packages using stolen npm tokens.

The kicker? It packs a "dead man's switch" that nukes its tracks if command channels go dark. That's not just sneaky. It's scorched earth.

What we can learn: This isn’t your average typo-squatting bot. It’s automated, it’s built for CI/CD, and it’s cracking open the idea that package registries can be trusted blindly. Time to rethink how teams validate and wall off dependencies - before the next worm turns.

faun.dev

AWS Compute Optimizer just got smarter. It now spots unused NAT Gateways by watching 32 days of CloudWatch traffic - and it doesn’t stop at low numbers. It checks the surrounding setup to avoid jumping the gun.

Recommendations come through the API (sorry, no GovCloud or China), and they now show up in the Cost Optimization Hub, giving a broader view of potential wins.
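The "don't jump the gun" part is the interesting bit, so here is a rough sketch of that kind of rule. Only the 32-day window comes from the announcement. The `NatGatewayUsage` shape, the daily byte totals, and the route-table flag are hypothetical stand-ins, and this is not Compute Optimizer's actual logic.

```python
from dataclasses import dataclass
from typing import Sequence

LOOKBACK_DAYS = 32  # from the announcement; everything else here is illustrative


@dataclass
class NatGatewayUsage:
    gateway_id: str
    daily_bytes: Sequence[int]       # hypothetical: one traffic total per day, oldest first
    referenced_by_route_table: bool  # hypothetical stand-in for "the surrounding setup"


def looks_unused(usage: NatGatewayUsage) -> bool:
    """Flag a gateway only with a full quiet window AND nothing routing to it."""
    window = usage.daily_bytes[-LOOKBACK_DAYS:]
    if len(window) < LOOKBACK_DAYS:
        return False  # not enough history to judge
    if any(day > 0 for day in window):
        return False  # traffic seen inside the lookback window
    # A silent gateway that is still a route target may be a standby; leave it alone.
    return not usage.referenced_by_route_table
```

The second check is what keeps a quiet failover gateway from being flagged as waste.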

🔗 Stories, Tutorials & Articles

geocod.io

A missing VPC Gateway Endpoint sent EC2-to-S3 traffic through a NAT Gateway, lighting up over $1,000 in unnecessary data processing charges. All that for in-region traffic hitting an AWS service.

Why? The subnet's default route pointed at the NAT Gateway, so S3 traffic followed it. Traffic only takes the free S3 Gateway Endpoint once you create one and attach it to the route table.

The lesson: Cloud networks aren’t simple. If you don’t shape the traffic, AWS will - expensively.
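To put that bill in perspective, here is a back-of-the-envelope sketch. The $0.045/GB NAT Gateway data processing rate is an assumption (a commonly quoted us-east-1 figure), so check the current pricing page for your region. The function names are made up for this example.

```python
# Assumed prices in USD; verify against current AWS pricing for your region.
NAT_DATA_PROCESSING_PER_GB = 0.045  # assumption: typical us-east-1 NAT Gateway rate
S3_GATEWAY_ENDPOINT_PER_GB = 0.0    # gateway endpoints add no data processing charge


def nat_processing_cost(gigabytes: float, rate_per_gb: float = NAT_DATA_PROCESSING_PER_GB) -> float:
    """Data processing charge for traffic routed through a NAT Gateway."""
    return gigabytes * rate_per_gb


def gigabytes_for_bill(bill_usd: float, rate_per_gb: float = NAT_DATA_PROCESSING_PER_GB) -> float:
    """Invert the formula: how much traffic produces a given charge."""
    return bill_usd / rate_per_gb


if __name__ == "__main__":
    gb = gigabytes_for_bill(1000.0)
    print(f"$1000 of NAT processing is roughly {gb / 1000:.1f} TB of traffic")
    print(f"Same traffic via an S3 Gateway Endpoint: ${gb * S3_GATEWAY_ENDPOINT_PER_GB:.2f}")
```

At the assumed rate, a four-figure surprise takes only a couple dozen terabytes of S3 traffic, which a busy batch job can move in days.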

hackerstack.org

This post outlines the various Terraform project files and their purposes, such as vars.tf for default variable declarations, terraform.tfvars for overriding default variable values, terraform.tf for tfstate backends and provider declarations, version.tf for Terraform version constraints, and .terraform.lock.hcl for dependency snapshots. Additional files in a Terraform project, like network.tf or database.tf, contain modules or provider resources for managing infrastructure. The post also includes examples of variables, tf files for Azure and Google Cloud, version constraints, and running Terraform commands like init, plan, apply, state manipulation, force-unlock, and taint.

tara.sh

A Linux user takes FreeBSD for a spin - and comes away impressed. What stands out? Clean, deliberate engineering. Boot environments make updates stress-free. The new pkgbase system adds modularity without chaos. And the OS treats uptime not just as a metric, but as a design goal.

The essay makes a solid case: decouple fast-moving desktops from server-grade stability. Tie OS evolution to hardware lifecycles that don’t panic every 6 months.

devopsdigest.com

Sauce Labs just dropped a reality check: 95% of orgs have fumbled AI projects. The kicker? 82% don’t have the QA talent or tools to keep things from breaking. Even worse, 61% of leaders don’t get software testing 101, leaving AI pipelines full of holes - cultural, procedural, and otherwise.

System shift: Agentic AI is crashing headfirst into old-school DevOps. Speed’s winning. Quality’s bleeding.

clickhouse.com

Netflix overhauled its logging pipeline to chew through 5 PB/day. The stack now leans on ClickHouse for speed and Apache Iceberg to keep storage costs sane.

Out went regex fingerprinting - slow and clumsy. In came a JFlex-generated lexer that actually keeps up. They also ditched generic serialization in favor of ClickHouse’s native protocol and sharded log tags to kill off unnecessary scans and trim query times.

Bigger picture: More teams are building bespoke ingest pipelines, tying high-throughput data flow to layered storage. Observability is getting the same kind of love as prod traffic. Finally.

medium.com

The authors discuss the development of a new automation infrastructure post-merger, leading to a unified automation project that can handle all cultures, languages, and clients efficiently. They chose Playwright over Cypress for its improved resource usage and faster execution times, aligning better with their expanding requirements. Additionally, they introduced a centralized npm package to address common needs across teams, reducing code duplication and maintenance costs while improving test reliability and readability.

govigilant.io

Vigilant runs distributed uptime checks with self-registering Go-based "outposts" scattered across the globe. Each one handles HTTP and Ping, reports back latency by region, and calls home over HTTPS.

The magic handshake? Vigilant plays root CA, handing out ephemeral TLS certs on the fly.

medium.com

When working with Terraform in a team environment, common issues may arise such as state locking, version mismatches, untracked local applies, and lack of transparency. Atlantis is an open-source tool that can help streamline collaboration by automatically running Terraform commands based on GitHub pull requests. Setting up Atlantis locally with Docker and ngrok allows for a transparent and auditable process where every plan and apply is visible in the same place as the code review.

authress.io

During the AWS us-east-1 meltdown - when DynamoDB, IAM, and other key services went dark - Authress kept the lights on. Their trick? A ruthless edge-first, multi-region setup built for failure.

They didn’t hope DNS would save them. They wired in automated failover, rolled their own health checks, and watched business metrics - not just system metrics - for signs of trouble. That combo? It worked.

To chase a 99.999% SLA, they stripped out weak links, dodged flaky third-party services, and made all compute region-agnostic. DynamoDB Global Tables handled global state. CloudFront + Lambda@Edge took care of traffic sorcery.

The bigger picture: Reliability isn’t a load balancer’s job anymore. It starts in your architecture. Cloud provider defaults? Not enough. Build like you don’t trust them.
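How tight is a 99.999% target? A quick worked calculation helps. This is plain arithmetic rather than anything from the Authress write-up, and the helper name is made up for this sketch.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600; ignores leap years


def downtime_budget_minutes(availability: float, period_minutes: float = MINUTES_PER_YEAR) -> float:
    """Allowed downtime for an availability target (as a fraction) over a period."""
    if not 0.0 <= availability <= 1.0:
        raise ValueError("availability must be a fraction between 0 and 1")
    return (1.0 - availability) * period_minutes


if __name__ == "__main__":
    for target in (0.999, 0.9999, 0.99999):
        print(f"{target:.3%} -> {downtime_budget_minutes(target):.2f} min/year")
```

Five nines leaves a little over five minutes of downtime per year, which is why manual failover and DNS-only strategies tend not to fit inside the budget.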

⚙️ Tools, Apps & Software

github.com

It is an open-source command-line utility that scans your AWS environment for unused resources—what we call "zombies"—that are quietly draining your cloud budget.

github.com

An Arch Linux kernel built with different schedulers and some other performance improvements.

github.com

A terminal-based AWS cost and resource dashboard built with Python and the Rich library. It provides an overview of AWS spend by account, service-level breakdowns, budget tracking, and EC2 instance summaries.

github.com

GitVex is a fully open-source serverless git hosting platform. No VMs, No Containers, Just Durable Objects and Convex.

🤔 Did you know?

Did you know that cloud-native environments are becoming the enterprise standard? In a 2025 industry survey, 82% of enterprises said they plan to use container-first/cloud-native platforms as their primary infrastructure within the next five years.

🤖 Once, SenseiOne Said

"We traded servers for APIs and kept the outages. Autoscaling hides backpressure until the budget alarms fire. SLOs are budgets for failure, not promises of safety."

— SenseiOne

⚡ Growth Notes

Build the quiet habit of replaying every major incident like a black-box flight recorder review, focusing on your own thinking, not just the system’s behavior. After the next outage, spend 20 focused minutes writing three lists: what signals you saw, what assumptions you made, and what you would automate or observe differently next time. Turn each assumption that slowed you down into a small, repeatable check you bake into runbooks, dashboards, or alerts. This shifts you from chasing fires to codifying judgment, which is what actually separates senior SREs from everyone else. Do this after every serious incident for a month and you will quietly build incident instincts that compound for the rest of your career.

👤 This Week's Human

This week, we’re highlighting Steve McKinney, CEO of McKinney Consulting Inc., Executive Coach, and M&A Deal Originator working between Seoul and Charlotte. After 13 years in senior roles at Adidas and Reebok, he built a global executive search and leadership practice over 25+ years (via Kestria’s 40+ offices), coaching hundreds of leaders and shaping Proactive Agility and the forthcoming Global Mindset (Q1 2026). Featured by Forbes, Business Insider, and Yahoo Finance, his throughline is practical, cross-cultural decision-making under uncertainty.

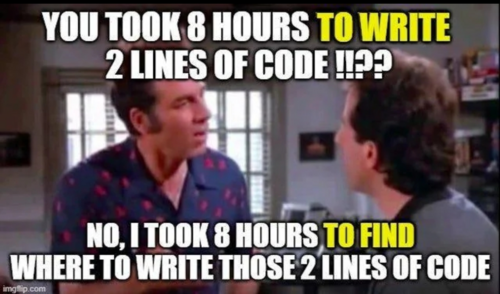

😂 Meme of the week