Kaptain

#Kubernetes #Docker #DistributedSystems

🔍 Inside this Issue

The walls between data and orchestration are melting—SQL is calling the Kubernetes API, Snowflake is scheduling like a cluster, and GKE is leaning hard into inference. From KYAML sanity to Tailscale sidecars and seven-figure cost wins, the details here are the kind you can steal by Monday.

🛠️ Accessing the Kubernetes API from SQL Server 2025

⚡ AI inference supercharges on Google Kubernetes Engine

🌍 Cloud native is not just for hyperscalers

🕸️ How I eliminated networking complexity

💸 How We Saved $1.22 Million Annually on GCP Costs in a Few Simple Steps

🤖 Introducing Headlamp AI Assistant

❄️ Introducing Kubernetes for Snowflake

📜 Kubernetes Will Solve YAML Headaches with KYAML

🧩 MariaDB Kubernetes Operator 25.08.0 Adds AI Vector Support and Disaster Recovery Enhancements

🧠 The open source AI compute tech stack: Kubernetes + Ray + PyTorch + vLLM

You just leveled up your infra instincts—go cash it in.

Have a great week!

FAUN.dev Team

ℹ️ News, Updates & Announcements

insideainews.com

Cloudera acquired Taikun to make data and AI workloads easier to deploy in any environment. The move underlines Cloudera's push for flexibility in managing complex IT infrastructure.

espresso.ai

Snowflake just leveled up its workload scheduler: it's now driven by LLMs and reinforcement learning. Instead of locking jobs to static warehouses, it predicts where to send them in real time. Smarter routing, tighter hardware use, over 40% shaved off compute bills.

Bigger picture: Another nod toward ML-based orchestration in data infra. Think less cron job, more Kubernetes for your queries.

dqindia.com

CNCF just dropped an AI workload conformance program, modeled on the Kubernetes conformance program, so AI tools play nice across clusters. Portability, meet your referee.

CNCF is also tightening the loop between OpenTelemetry and OpenSearch, turning ad-hoc hacks into actual cross-project coordination. And Backstage and GitOps tooling? Getting a boost to better serve the platform engineering crowd.

kubernetes.io

Headlamp just dropped an AI Assistant plugin that folds LLM-driven actions and queries straight into the Kubernetes UI. It taps into context-aware prompts to spot issues, restart deployments, and hunt down flaky pods—without leaving the interface.

System shift: This pushes Kubernetes toward intent-based ops. Less grinding through YAML. Less memorizing CLI incantations.

hackernoon.com

MariaDB Kubernetes Operator 25.08.0 drops some real upgrades.

First up: physical backups. Now supported through native MariaDB tools and Kubernetes CSI snapshots—huge win if you're dealing with chunky datasets and tight recovery windows.

It also defaults to MariaDB 11.8, which brings in a native vector data type. That’s a clear nod to AI and RAG workloads—less hacky, more production-proof.

And there's a new Helm chart that bundles everything under one release. Clean, tight, deployable.

System shift: Physical snapshots and vector types aren’t just features—they’re a signal. MariaDB's aiming straight at cloud-native AI infra.

siliconangle.com

Google Cloud's pushing GKE beyond container orchestration, framing it as an AI inference engine. Meet the new crew: the Inference Gateway (smart load balancer, talks models and hardware), custom compute classes, and a Dynamic Workload Scheduler that tunes for both speed and spend.

The setup handles GPU and TPU-heavy bursts, plugs into TensorFlow and PyTorch, and keeps its cool during traffic spikes.

Big picture: Kubernetes isn’t just herding containers anymore. It's gunning to be the backbone of scaled AI.

🔗 Stories, Tutorials & Articles

medium.com

Arpeely chopped $140K/month off their cloud bill using a surgical mix of GCP tricks. Committed Use Discounts (CUDs) for high-availability services? Check. Smarter Kubernetes HPA configs? Definitely. Archiving old BigQuery data into GCS Archive? That one alone slashed storage costs 16x.

The real kicker: they rewired their HPA strategy. By capping scale events to 30% (or max 20 pods every 120s), they cut pod counts by 30%—with zero hits to uptime.
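
To see how a "30% or 20 pods per 120s" cap plays out, here is a minimal Python model of how the HPA combines `spec.behavior.scaleUp` policies. It assumes the cap applies to scale-up and, since the write-up doesn't say, assumes `selectPolicy: Min` (Kubernetes defaults to `Max`, which picks whichever policy allows the bigger jump). It's a simplification: the real controller also subtracts scale events already made within the period and clamps to `maxReplicas`.

```python
# Mirrors the shape of an HPA spec.behavior.scaleUp block.
# selectPolicy "Min" is an assumption; Kubernetes defaults to "Max".
SCALE_UP = {
    "selectPolicy": "Min",
    "policies": [
        {"type": "Percent", "value": 30, "periodSeconds": 120},
        {"type": "Pods", "value": 20, "periodSeconds": 120},
    ],
}


def scale_up_limit(period_start_replicas: int, behavior: dict) -> int:
    """Upper bound on replicas after one period (earlier scale events ignored)."""
    select = behavior.get("selectPolicy", "Max")
    if select == "Disabled":
        return period_start_replicas  # scaling in this direction is turned off
    proposals = []
    for policy in behavior["policies"]:
        if policy["type"] == "Pods":
            proposals.append(period_start_replicas + policy["value"])
        elif policy["type"] == "Percent":
            # Integer ceiling of n * (1 + p/100); rounding up guarantees progress.
            proposals.append(-(-period_start_replicas * (100 + policy["value"]) // 100))
    # Min picks the most conservative policy, Max the most aggressive one.
    return min(proposals) if select == "Min" else max(proposals)


if __name__ == "__main__":
    for replicas in (10, 100):
        print(replicas, "->", scale_up_limit(replicas, SCALE_UP))
```

With `Min`, the percent rule governs small deployments (10 pods can grow to 13) and the 20-pod rule takes over for large ones (100 pods can grow only to 120), which is what keeps bursty workloads from overshooting.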

thenewstack.io

Kubernetes is eyeing a YAML remix. Version 1.34 may bring in KYAML—a stricter, YAML-compatible subset built to cut down on sloppy configs and sneaky formatting bugs.

KYAML keeps the good parts: comments, trailing commas, unquoted keys. But it dumps YAML’s whitespace drama. Existing manifests and Helm charts? Still work.

System shift: KYAML hints at a bigger move—Kubernetes leaning into its own domain-specific config format, tuned for automation and built to stay solid under pressure.
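
For a feel of the style, here is a rough Python emitter that approximates what the KYAML write-up describes: flow-style `{}` and `[]` so indentation carries no meaning, string values always double-quoted (so `no` or `3.10` can't be misread as a boolean or float), bare keys where safe, and trailing commas. This is not kubectl's emitter; the key-quoting rule in `_PLAIN_KEY` is a simplified assumption.

```python
import json
import re

# Keys matching this pattern stay unquoted; anything else gets quoted.
# Assumption: a simplified rule, not KYAML's exact quoting logic.
_PLAIN_KEY = re.compile(r"^[A-Za-z_][A-Za-z0-9_.-]*$")


def _key(k: str) -> str:
    return k if _PLAIN_KEY.match(k) else json.dumps(k)


def _scalar(v) -> str:
    if isinstance(v, bool):  # check bool before int: bool is an int subclass
        return "true" if v else "false"
    if v is None:
        return "null"
    if isinstance(v, (int, float)):
        return repr(v)
    # Strings are always double-quoted, so their type is never guessed.
    return json.dumps(str(v))


def to_kyaml_like(value, indent: int = 0) -> str:
    """Render nested dicts/lists in a KYAML-like flow style with trailing commas."""
    pad = "  " * indent
    inner = "  " * (indent + 1)
    if isinstance(value, dict):
        if not value:
            return "{}"
        lines = [f"{inner}{_key(k)}: {to_kyaml_like(v, indent + 1)}," for k, v in value.items()]
        return "{\n" + "\n".join(lines) + f"\n{pad}}}"
    if isinstance(value, list):
        if not value:
            return "[]"
        lines = [f"{inner}{to_kyaml_like(v, indent + 1)}," for v in value]
        return "[\n" + "\n".join(lines) + f"\n{pad}]"
    return _scalar(value)


if __name__ == "__main__":
    svc = {"apiVersion": "v1", "kind": "Service",
           "metadata": {"name": "hostnames", "labels": {"app": "hostnames"}},
           "spec": {"ports": [{"port": 80, "protocol": "TCP"}]}}
    print(to_kyaml_like(svc))
```

Because every collection is explicitly closed, a mis-indented line changes nothing about the structure, which is the whitespace drama KYAML is meant to remove.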

spacelift.io

Kubernetes observability isn’t just about catching metrics or tailing logs. It’s about stitching together metrics, logs, and traces to see what’s actually happening—across services, over time, and through the chaos.

Thing is, Kubernetes doesn’t come with this built in. So teams hack together toolchains—Prometheus, ELK, Kubecost, take your pick—and battle the usual mess: noisy data, short-lived containers, silos everywhere. Insight doesn’t show up. You have to extract it.
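
Stitching signals usually comes down to a shared key. A minimal Python sketch, assuming spans and log lines both carry a `trace_id` (as they do when logs are emitted with OpenTelemetry trace context); the field names `trace_id` and `duration_ms` are hypothetical stand-ins for whatever your pipeline exports.

```python
from collections import defaultdict
from typing import Any, Dict, List

Record = Dict[str, Any]
Grouped = Dict[str, Dict[str, List[Record]]]


def correlate(spans: List[Record], logs: List[Record]) -> Grouped:
    """Group spans and log lines under the trace ID they share."""
    by_trace: Grouped = defaultdict(lambda: {"spans": [], "logs": []})
    for span in spans:
        by_trace[span["trace_id"]]["spans"].append(span)
    for line in logs:
        trace_id = line.get("trace_id")
        if trace_id:  # log lines without trace context can't be stitched in
            by_trace[trace_id]["logs"].append(line)
    return dict(by_trace)


def slowest_trace(grouped: Grouped) -> str:
    """Return the ID of the trace whose longest span took the most time."""
    return max(
        grouped,
        key=lambda tid: max((s["duration_ms"] for s in grouped[tid]["spans"]), default=0),
    )
```

Given a latency spike, `slowest_trace` points at the request and `correlate` hands back the log lines emitted during it, across every service that touched the trace.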

dbafromthecold.com

SQL Server 2025 rolls out `sp_invoke_external_rest_endpoint`, a new way to hit REST APIs straight from T-SQL. That includes calling the Kubernetes API, thanks to a reverse proxy in front.

The setup’s not exactly plug-and-play. You’ll need custom TLS certs, an nginx reverse proxy, and Kubernetes RBAC to keep things locked down while letting SQL talk to the cluster.

Bigger picture: SQL Server’s not just crunching rows anymore. It’s stepping into systems integration—wiring data logic into orchestrators like Kubernetes.
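
Whatever sits in front, the call ultimately lands on a standard Kubernetes REST path, and the service account behind it needs an RBAC Role granting `list` on `pods` for this example. A minimal Python sketch of the URL a caller would target and of flattening the `PodList` response into rows, the way a T-SQL caller might shred it with `OPENJSON`. The proxy hostname is hypothetical.

```python
import json
from typing import List, Optional, Tuple
from urllib.parse import quote

# Hypothetical address of the nginx reverse proxy that terminates TLS in
# front of the API server; substitute your own.
PROXY_BASE = "https://k8s-proxy.example.internal"


def pods_url(namespace: str, label_selector: Optional[str] = None) -> str:
    """Build the core/v1 'list pods' URL that the proxy forwards to the API server."""
    url = f"{PROXY_BASE}/api/v1/namespaces/{quote(namespace, safe='')}/pods"
    if label_selector:
        url += "?labelSelector=" + quote(label_selector, safe="")
    return url


def pod_rows(pod_list_json: str) -> List[Tuple[str, str]]:
    """Flatten a PodList response into (name, phase) rows."""
    pod_list = json.loads(pod_list_json)
    return [
        (item["metadata"]["name"], item.get("status", {}).get("phase", "Unknown"))
        for item in pod_list.get("items", [])
    ]
```

On the SQL side, a URL like this is what you would pass as `@url` with `@method = 'GET'`.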

paulwelty.com

A fresh pattern’s gaining traction: Docker + Tailscale sidecars replacing old-school reverse proxies and clunky VPNs. Each service runs as its own mesh-routed node, containerized and independent.

The trick? Network namespace sharing. App containers hook into the Tailscale mesh with no exposed ports, no shared networks. You keep Docker’s isolation and still get zero-trust access across 15+ services.

Big shift: Say goodbye to port-forwarding and central VPN headaches. Say hello to decentralized, identity-driven access—spun up in plain Compose files.
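
The namespace-sharing trick is Compose's `network_mode: "service:<name>"`. Here is a Python sketch that generates one app-plus-sidecar pair per service, loosely following the shape of Tailscale's own Docker example. The `ts-<name>` naming and the state paths are hypothetical, and it assumes a reusable auth key in `TS_AUTHKEY`, since every sidecar joins the tailnet as its own node.

```python
import json
from typing import Any, Dict

Service = Dict[str, Any]


def tailscale_pair(name: str, image: str) -> Dict[str, Service]:
    """One app container plus a Tailscale sidecar that owns its network namespace."""
    sidecar = f"ts-{name}"  # hypothetical naming convention
    return {
        sidecar: {
            "image": "tailscale/tailscale:latest",
            "hostname": name,  # Tailscale's default machine name for this node
            "environment": [
                "TS_AUTHKEY=${TS_AUTHKEY}",  # interpolated by Compose from env or .env
                "TS_STATE_DIR=/var/lib/tailscale",
                "TS_USERSPACE=false",
            ],
            "volumes": [f"./{sidecar}/state:/var/lib/tailscale"],
            "devices": ["/dev/net/tun:/dev/net/tun"],
            "cap_add": ["net_admin"],
            "restart": "unless-stopped",
        },
        name: {
            "image": image,
            # Join the sidecar's network namespace: no published ports, no shared network.
            "network_mode": f"service:{sidecar}",
            "depends_on": [sidecar],
        },
    }


def compose_file(apps: Dict[str, str]) -> Dict[str, Any]:
    """Build a Compose document with a Tailscale sidecar per app."""
    services: Dict[str, Service] = {}
    for name, image in apps.items():
        services.update(tailscale_pair(name, image))
    return {"services": services}


if __name__ == "__main__":
    print(json.dumps(compose_file({"grafana": "grafana/grafana"}), indent=2))
```

Note what's absent: no `ports:` anywhere. Each app is reachable only through its own tailnet identity.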

🎦 Videos, Talks & Presentations

youtube.com

AI workloads are computationally demanding. They require scale for both compute and data, and they require unprecedented heterogeneity across workloads, models, data types, and hardware accelerators. As a consequence, the software stack for running compute-intensive AI workloads is fragmented and rapidly evolving. Companies that productionize AI end up building large AI platform teams to manage these workloads. However, within the fragmented landscape, common patterns are beginning to emerge. An emerging software stack combines Kubernetes, Ray, PyTorch, and vLLM. This talk describes the role of each of these frameworks, how they operate together, and illustrates this combination with case studies from Pinterest, Uber, and Roblox.

⚙️ Tools, Apps & Software

github.com

KLogger is a Python CLI application designed to efficiently collect and organize logs from Kubernetes deployments within a specified namespace.

github.com

A Kubernetes mutating admission webhook that automatically rewrites container image references to use pull-through cache registries (like AWS ECR Pull Through Cache).
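
The entry doesn't describe the webhook's internals, so this is a hypothetical Python sketch of the core logic such a webhook needs. It normalizes an image reference the way Docker does (bare names live on Docker Hub under `library/`), maps the upstream registry to a pull-through cache prefix, and returns the changes as a base64-encoded JSONPatch in an `admission.k8s.io/v1` AdmissionReview response. The account ID, region, and `docker-hub`/`ghcr` prefixes are made up, and the HTTPS server and webhook registration are omitted.

```python
import base64
import json
from typing import Any, Dict, List, Tuple

# Hypothetical mapping: upstream registry -> ECR pull-through cache prefix.
CACHE_PREFIXES = {
    "docker.io": "123456789012.dkr.ecr.us-east-1.amazonaws.com/docker-hub",
    "ghcr.io": "123456789012.dkr.ecr.us-east-1.amazonaws.com/ghcr",
}


def split_registry(image: str) -> Tuple[str, str]:
    """Return (registry, repository[:tag|@digest]) using Docker's normalization."""
    first, sep, rest = image.partition("/")
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, repo = first, rest
    else:
        registry, repo = "docker.io", image  # no registry host: Docker Hub
    if registry == "docker.io" and "/" not in repo:
        repo = f"library/{repo}"  # official images live under library/
    return registry, repo


def rewrite_image(image: str) -> str:
    registry, repo = split_registry(image)
    prefix = CACHE_PREFIXES.get(registry)
    # Unknown registries (including the cache itself) are left untouched.
    return f"{prefix}/{repo}" if prefix else image


def image_patches(pod: Dict[str, Any]) -> List[Dict[str, str]]:
    patches = []
    spec = pod.get("spec", {})
    for field in ("initContainers", "containers"):
        for i, container in enumerate(spec.get(field, [])):
            new_image = rewrite_image(container["image"])
            if new_image != container["image"]:
                patches.append({"op": "replace", "path": f"/spec/{field}/{i}/image", "value": new_image})
    return patches


def admission_response(review: Dict[str, Any]) -> Dict[str, Any]:
    """Answer an AdmissionReview request, allowing the Pod and attaching any patch."""
    request = review["request"]
    patches = image_patches(request["object"])
    response: Dict[str, Any] = {"uid": request["uid"], "allowed": True}
    if patches:
        response["patchType"] = "JSONPatch"
        response["patch"] = base64.b64encode(json.dumps(patches).encode()).decode()
    return {"apiVersion": "admission.k8s.io/v1", "kind": "AdmissionReview", "response": response}
```

Because already-rewritten images point at the cache host, which isn't in the map, a Pod that passes through the webhook twice comes out the same.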

🤔 Did you know?

In Kubernetes, Pod termination is carefully orchestrated: the `preStop` hook runs before the SIGTERM signal is sent to the container. The `terminationGracePeriodSeconds` countdown starts before the hook runs, so the hook's time comes out of the same budget; if the hook is still running when the period expires, the kubelet grants a one-off 2-second extension. Once the hook completes, the kubelet delivers SIGTERM, then issues a SIGKILL if the container hasn't exited by the end of the grace period. To prevent dropped requests during shutdowns, a robust pattern is a short sleep in your `preStop` hook (recent Kubernetes versions also offer a built-in `sleep` action). Deletion already marks the Pod's endpoints as not ready, and the pause gives kube-proxy and load balancers time to stop routing traffic before your application handles SIGTERM and exits gracefully.
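
Because the grace countdown starts before `preStop` runs, every second spent in the hook is a second your SIGTERM handler loses. A tiny Python model of that arithmetic, assuming a single container and a hook that finishes inside the grace period (the 2-second overrun extension is deliberately not modeled):

```python
from typing import List, Tuple


def shutdown_timeline(grace_period_s: int, prestop_s: int) -> List[Tuple[int, str]]:
    """Return (seconds since deletion, event) pairs for one container."""
    if not 0 <= prestop_s <= grace_period_s:
        # The one-off 2-second extension for an overrunning hook is not modeled.
        raise ValueError("model assumes the preStop hook finishes within the grace period")
    return [
        (0, "deletion: grace countdown starts, preStop runs"),
        (prestop_s, "preStop done: SIGTERM sent"),
        (grace_period_s, "grace period over: SIGKILL if still running"),
    ]


def sigterm_budget(grace_period_s: int, prestop_s: int) -> int:
    """Seconds the app has between SIGTERM and a possible SIGKILL."""
    timeline = shutdown_timeline(grace_period_s, prestop_s)
    return timeline[2][0] - timeline[1][0]
```

With the default 30-second grace period and a 10-second `preStop` sleep, the application gets 20 seconds to drain after SIGTERM; if it needs more, raise `terminationGracePeriodSeconds` rather than shortening the sleep.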

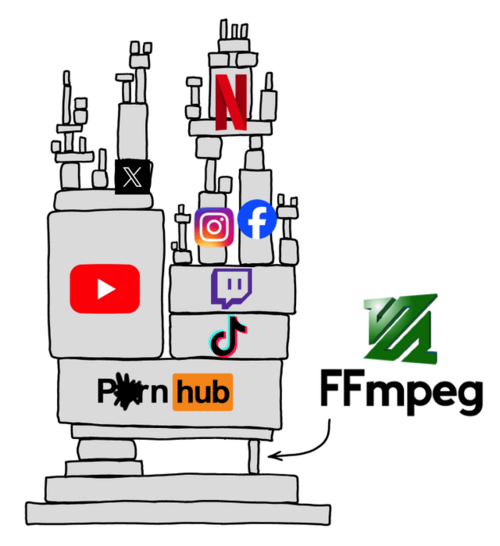

😂 Meme of the week

👤 This Week's Human

This Week’s Human is Andrew Foe, founder building NVIDIA-powered edge datacenters at HyperAI and a 20+ year cloud/AI practitioner. He’s helped hosting partners become cloud providers, led IoDis to NVIDIA Elite Partner status, and ships sustainable GPU infrastructure (A100/H100/L40S). Previously with Dell, HP, Lenovo, and more, he’s worked with teams at Booking.com, Leaseweb, and AMS-IX to turn complex infrastructure into systems that actually run.