
Kala

#ArtificialIntelligence #MachineLearning #MLOps

🔍 Inside this Issue

This one runs on contrasts: trillion‑dollar scaling wobble vs. 80k‑token discipline, programmable research agents vs. rhyme‑powered jailbreaks, and red‑team scars next to math‑driven interpretability. If you care about shipping responsibly, the links below will tighten your stack, widen your perspective, and nudge you toward better defaults.

🚏 200k Tokens Is Plenty

💸 A trillion dollars is a terrible thing to waste

🧪 Gemini Deep Research Is Now Programmable Through a New API

⚡ GPT-5.2 Quietly Beats Human Experts at Knowledge Work

🛡️ Practical LLM Security Advice from the NVIDIA AI Red Team

🧩 Roses are red, violets are blue, if you phrase it as a poem, any jailbreak will do

🧰 So you wanna build a local RAG?

🧮 Learning Collatz - The Mother of all Rabbit Holes

🧭 Prompts for Open Problems

🎨 How to Create an Effective Prompt for Nano Banana Pro

Sharper instincts, fewer footguns, now turn it into momentum.

See you in the next issue!

FAUN.dev() Team

⭐ Patrons

faun.dev

Hey there,

We’re extending the 25% discount for Building with GitHub Copilot and all our courses on FAUN.Sensei(). The last activation window was short, so we’re giving everyone more time to use it.

Building with GitHub Copilot is a practical, in-depth guide to working with Copilot as more than an autocomplete tool. It shows how to turn Copilot into a genuine coding partner that can navigate your codebase, reason across files, generate tests, review pull requests, explain changes, automate tasks, and even operate as an autonomous agent when needed.

You'll learn how to customize Copilot for your team, how to use instruction files effectively, how to integrate GitHub CLI, how to extend capabilities with GitHub Extensions or MCP servers and more!

The course covers the full Copilot feature set and shows how to use it effectively to improve your productivity, including lesser-known capabilities and advanced options.

⏳ Use the coupon SENSEI2525 before it expires on December 31. After that, the discount ends.

ℹ️ News, Updates & Announcements

faun.dev

OpenAI just dropped GPT-5.2, now the default for paying users in ChatGPT and the API. Big lift in code gen, multi-modal reasoning, and workflow orchestration.

It smokes benchmarks like GDPval and SWE-Bench Pro, knocks 40–60 minutes off daily tasks, and speeds things up with smarter input caching.

The bigger shift? GPT-5.2 pushes foundation models deeper into full-stack dev territory, less bouncing between tools, more doing everything through one brain.

faun.dev

Google dropped the Gemini Deep Research agent through its Interactions API. Devs can now plug serious autonomous research flows, fueled by Gemini 3 Pro, into their apps. It tackles gnarly, multi-hop queries and spits out citable results.

Built for gnarlier use cases, it benchmarks strong on DeepSearchQA, which just got open-sourced for folks who want to poke at it.

⭐ Sponsors

bytevibe.co

The Never :q! Hoodie is for developers who know that quitting is rarely the right command. Soft, warm, and built for long sessions, it features a plush cotton-poly blend, a classic fit, and a message every Vim user understands.

🎁 Use SUBSCR1B3R for a limited-time 25% discount

ℹ️ The coupon applies to all other products as well.

⏳ Offer ends December 31

🔗 Stories, Tutorials & Articles

the-decoder.com

A new study just broke the safety game wide open: rhymed prompts slipped past filters in 25 major LLMs, including Gemini 2.5 Pro and DeepSeek, with success rates of up to 100%. No clever chaining, no jailbreak soup. Just single-shot rhyme.

Turns out, poetic language isn’t just for bard-core Twitter. When it comes to triggering unsafe outputs, especially around cyberattacks or data leaks, rhymes triple success rates compared to plain prose.

developer.nvidia.com

NVIDIA’s AI Red Team nailed three security sinkholes in LLM apps: reckless use of exec/eval, RAG pipelines with overly broad data access, and unsanitized Markdown output. These cracks open doors to remote code execution, sneaky prompt injection, and link-based data leaks.

The fix-it trend: App security’s leaning hard into sandboxed runtimes, tighter data perms, and markdown that can’t stab you.
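The Markdown point is the easiest one to show concretely. The snippet below is a minimal sketch, not NVIDIA's code, of one way to block link-based exfiltration in LLM output before it is rendered. It drops images and strips links that point outside an allowlist. `ALLOWED_HOSTS`, `sanitize_markdown`, and the regex are hypothetical, and the pattern only covers inline `[text](url)` and `![alt](url)` syntax.

```python
import re
from urllib.parse import urlparse

# Hypothetical allowlist: the only hosts this app trusts for links and images.
ALLOWED_HOSTS = {"docs.example.com"}

# Inline Markdown links/images: optional "!", [label], (url), optional "title".
_MD_LINK = re.compile(r'(!?)\[([^\]]*)\]\(([^)\s]+)(?:\s+"[^"]*")?\)')


def _is_allowed(url: str) -> bool:
    parsed = urlparse(url)
    return parsed.scheme == "https" and parsed.hostname in ALLOWED_HOSTS


def sanitize_markdown(text: str) -> str:
    """Remove untrusted images and reduce untrusted links to their visible text."""

    def replace(match: re.Match) -> str:
        bang, label, url = match.groups()
        if _is_allowed(url):
            return match.group(0)  # trusted target: keep unchanged
        if bang:
            return ""  # untrusted image: drop it, since renderers fetch images automatically
        return label  # untrusted link: keep the text, discard the URL

    return _MD_LINK.sub(replace, text)
```

Raw HTML, autolinks, and reference-style links are not handled here. A production renderer would more likely sanitize at the HTML level after parsing.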

garymarcus.substack.com

OpenAI co-founder Ilya Sutskever just said the quiet part out loud: scaling laws are breaking down. Bigger models aren’t getting better at thinking; they’re getting worse at generalizing and reasoning.

Now he’s eyeing neurosymbolic AI and innate inductive constraints. Yep, the “just make it huge” era might be over.

testingcatalog.com

Google’s been quietly field-testing two shadow models, Fierce Falcon and Ghost Falcon, on LM Arena. Early signs? They're probably warm-ups for the next Gemini 3 Flash or Pro drop. Classic Google move: float a checkpoint, stir up curiosity, then go GA.

argmin.net

The author, Ben Recht, proposes five research directions inspired by his graduate machine learning class, arguing for different research rather than just more. These prompts include adopting a design-based view for decision theory, explaining the robust scaling trends in competitive testing, and moving beyond average case evaluation. Crucially, he calls for optimization innovations to improve LLM reasoning efficiency and views the development of high-performing open-source, open-corpus language models requiring minimal compute as the most vital applied problem.
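To make "moving beyond average case evaluation" concrete, here is a small illustration that is not from Recht's post. It shows how a healthy average can hide a subgroup where the model always fails. The group labels and the `accuracy_report` helper are hypothetical.

```python
from collections import defaultdict
from typing import Hashable, Sequence, Tuple


def accuracy_report(labels: Sequence, preds: Sequence, groups: Sequence[Hashable]) -> Tuple[float, Hashable, float]:
    """Return (overall accuracy, worst group, worst group's accuracy)."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for y, p, g in zip(labels, preds, groups):
        total[g] += 1
        correct[g] += int(y == p)
    per_group = {g: correct[g] / total[g] for g in total}
    overall = sum(correct.values()) / sum(total.values())
    worst = min(per_group, key=per_group.get)  # group with the lowest accuracy
    return overall, worst, per_group[worst]
```

Take a model that predicts `1` everywhere, on data where a small group "b" is labeled `0`. It scores 0.8 overall but 0.0 on "b", and that gap is exactly what an average-only metric reports as fine.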

ampcode.com

Amp’s team isn’t chasing token limits. Even with ~200k available via Opus 4.5, they stick to short, modular threads, around 80k tokens each.

Why? Smaller threads are cheaper, more stable, and just work better. Instead of stuffing everything into a single mega-context, they slice big tasks into focused pieces. Cleaner scope, faster runs, fewer surprises.
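Amp describes a working habit, not a tool, so what follows is a hypothetical illustration. It greedily packs the pieces of a large task into threads that each stay under an ~80k-token budget. The 4-characters-per-token estimate and `pack_into_threads` are assumptions; a real setup would count tokens with the model's own tokenizer.

```python
from typing import List

THREAD_BUDGET = 80_000  # target tokens per thread, matching the figure Amp cites
CHARS_PER_TOKEN = 4     # rough heuristic, not a real tokenizer


def estimate_tokens(text: str) -> int:
    # Ceiling division so any non-empty piece costs at least one token.
    return -(-len(text) // CHARS_PER_TOKEN)


def pack_into_threads(pieces: List[str], budget: int = THREAD_BUDGET) -> List[List[str]]:
    """Group task pieces in order so each thread's estimated size stays within budget."""
    threads: List[List[str]] = []
    current: List[str] = []
    used = 0
    for piece in pieces:
        cost = estimate_tokens(piece)
        if cost > budget:
            raise ValueError("piece exceeds the per-thread budget; split it further")
        if current and used + cost > budget:
            threads.append(current)  # close the full thread and start a fresh one
            current, used = [], 0
        current.append(piece)
        used += cost
    if current:
        threads.append(current)
    return threads
```

Keeping pieces in their original order preserves task sequencing. The trade-off is that the packing is not optimally tight.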

axiommath.ai

Researchers trained small transformer models to predict the "long Collatz step," an arithmetic rule for the infamous unsolved Collatz conjecture, achieving surprisingly high accuracy up to 99.8%. The models did not learn the universal algorithm, but instead showed quantized learning, mastering specific input classes defined by their binary structure. Error analysis revealed that mistakes were systematic and explainable by simple rules, demonstrating that transformers can learn complex arithmetic functions by focusing on special cases rather than hallucinating. This study provides a new method for AI interpretability by leveraging the known mathematical structure of the problem to analyze the model's learning process.
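The study's exact definition of the "long Collatz step" may differ from what follows. This sketch assumes the common odd-to-odd formulation: multiply by 3, add 1, then halve until the result is odd. It is an assumption for illustration, not the researchers' code.

```python
from typing import Tuple


def long_collatz_step(n: int) -> Tuple[int, int]:
    """Map odd n to the next odd number in its Collatz sequence.

    Returns (next odd number, k), where k is how many times 3n + 1 was halved.
    """
    if n <= 0 or n % 2 == 0:
        raise ValueError("n must be a positive odd integer")
    m = 3 * n + 1
    k = 0
    while m % 2 == 0:
        m //= 2
        k += 1
    return m, k
```

In this formulation the number of halvings k is fixed by the lowest bits of n. Every n ≡ 3 (mod 4) gives k = 1, and every n ≡ 1 (mod 8) gives k = 2. This offers one intuition, not a claim from the paper, for why input classes defined by binary structure could be natural units for a model to master one at a time.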

portfolio-blog-starter.vercel.app

Skald spun up a full local RAG stack, with pgvector, Sentence Transformers, Docling, and llama.cpp, in under 10 minutes. The thing hums on English point queries. Benchmarks show open-source models and rerankers can go toe-to-toe with SaaS tools in most tasks. They stumble, though, on multilingual prompts and cross-doc mashups.
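At its core, the retrieval step in a stack like this ranks stored chunks by vector similarity to the query embedding. In Skald's setup that happens inside Postgres via pgvector, whose `<=>` operator computes cosine distance, and Sentence Transformers produces the embeddings. The pure-Python sketch below uses toy 3-d vectors and hypothetical document IDs in place of real embeddings.

```python
import math
from typing import Dict, List, Tuple


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query: List[float], index: Dict[str, List[float]], k: int = 2) -> List[Tuple[str, float]]:
    """Return the k chunk IDs most similar to the query, best first."""
    scored = [(doc_id, cosine(query, vec)) for doc_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical toy index standing in for real sentence embeddings.
index = {
    "pgvector-setup": [1.0, 0.0, 0.0],
    "llama-cpp-notes": [0.0, 1.0, 0.0],
    "docling-parsing": [0.7, 0.7, 0.0],
}
```

A reranker, as in Skald's benchmarks, would then re-score these top-k candidates with a more expensive model before they reach the LLM.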

radicalcuriosity.xyz

The author details how to effectively prompt Google’s Nano Banana Pro, a visual reasoning model, emphasizing that success relies on structured design documents rather than vague requests. The method prioritizes four key steps: defining the Work Surface (e.g., dashboard or comic), specifying the precise Layout, listing all required Components, and enforcing strict Constraints to maintain consistency. This approach leverages the model's distinct engines (like Layout, Typography, and Style) to create complex, structurally coherent visual artifacts, proven through an ambitious comic book adaptation project.
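One way to make the four-part structure repeatable is to render every request from the same template. The section labels, the `DesignBrief` class, and the plain-text format below are assumptions for illustration, not the author's actual template.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class DesignBrief:
    work_surface: str                                      # e.g. dashboard, comic page
    layout: str                                            # precise arrangement of regions
    components: List[str] = field(default_factory=list)    # everything that must appear
    constraints: List[str] = field(default_factory=list)   # rules to keep output consistent

    def to_prompt(self) -> str:
        """Render the four sections in a fixed order so every request has the same shape."""
        lines = [
            f"WORK SURFACE: {self.work_surface}",
            f"LAYOUT: {self.layout}",
            "COMPONENTS:",
            *[f"- {c}" for c in self.components],
            "CONSTRAINTS:",
            *[f"- {c}" for c in self.constraints],
        ]
        return "\n".join(lines)
```

For a multi-page project like the comic adaptation, shared constraints (character designs, palette) could live in one list reused across every page's brief.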

⚙️ Tools, Apps & Software

github.com

RunAgent simplifies serverless deployment of your AI agents. It ships with a powerful CLI, multi-language SDK support, and built-in agent invocation and streaming support.

github.com

An open-source, code-first Go toolkit for building, evaluating, and deploying sophisticated AI agents with flexibility and control.

🤔 Did you know?

Did you know that PyTorch’s torch.use_deterministic_algorithms(True) alone does not guarantee deterministic GPU matrix multiplies on CUDA >= 10.2? To force bit-wise reproducibility for GEMM and related cuBLAS ops, you must also set the environment variable CUBLAS_WORKSPACE_CONFIG to either :4096:8 or :16:8, which switches cuBLAS into deterministic workspace modes.

Without this setting, those cuBLAS calls are not guaranteed to be reproducible, and with deterministic algorithms enabled PyTorch raises a RuntimeError when it reaches them. Many practitioners also turn off torch.backends.cudnn.benchmark and enable torch.backends.cudnn.deterministic to keep cuDNN from auto-tuning algorithms between runs.
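Putting those settings together, a minimal setup sketch might look like this. `enable_determinism` is a hypothetical helper name. It sets the environment variable before PyTorch is touched, because the variable must be in place before the first cuBLAS call. It also skips the torch calls if torch isn't installed.

```python
import os
import random


def enable_determinism(seed: int = 0) -> bool:
    """Configure reproducible PyTorch runs; returns False if torch is not installed.

    Call this at the very start of the program, before any CUDA work happens.
    """
    # ":4096:8" reserves more workspace memory; ":16:8" is the smaller option.
    os.environ.setdefault("CUBLAS_WORKSPACE_CONFIG", ":4096:8")
    random.seed(seed)
    try:
        import torch
    except ImportError:
        return False
    torch.manual_seed(seed)                    # seeds the RNG on all devices
    torch.use_deterministic_algorithms(True)   # error on ops lacking a deterministic path
    torch.backends.cudnn.benchmark = False     # no per-run autotuning of conv algorithms
    torch.backends.cudnn.deterministic = True  # restrict cuDNN to deterministic kernels
    return True
```

Determinism usually costs some throughput, so it is common to enable it for debugging and evaluation runs rather than for every training job.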

🤖 Once, SenseiOne Said

"A deployed model is just a set of assumptions about data with a checksum. MLOps is the work of proving when those assumptions have expired, not retraining on a calendar."

— SenseiOne
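One common way to test whether a model's data assumptions have "expired" is to compare a feature's live distribution against its training distribution with the Population Stability Index (PSI). Retraining is then triggered on drift rather than on a schedule. The sketch below is illustrative. The bin edges are up to you, and the 0.2 threshold is a widely used rule of thumb, not a universal constant.

```python
import math
from typing import List, Sequence

DRIFT_THRESHOLD = 0.2  # common rule of thumb, not a universal constant


def bin_fractions(values: Sequence[float], edges: List[float]) -> List[float]:
    """Share of values in each bin; `edges` are sorted inner bin boundaries."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[sum(v >= e for e in edges)] += 1  # number of edges <= v is the bin index
    return [c / len(values) for c in counts]


def psi(reference: Sequence[float], live: Sequence[float], edges: List[float], eps: float = 1e-6) -> float:
    """Population Stability Index: sum over bins of (live - ref) * ln(live / ref)."""
    total = 0.0
    for r, a in zip(bin_fractions(reference, edges), bin_fractions(live, edges)):
        r, a = max(r, eps), max(a, eps)  # clamp empty bins to avoid log(0)
        total += (a - r) * math.log(a / r)
    return total


def assumptions_expired(reference, live, edges) -> bool:
    """Flag a retrain when the live distribution drifts past the threshold."""
    return psi(reference, live, edges) > DRIFT_THRESHOLD
```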

⚡Growth Notes

Build a monitoring loop for your skills the same way you would for a production ML system: every quarter, define 1 or 2 high-impact capabilities, log real usage, and track output quality, latency, and failure modes.

Treat manual glue work (hand-rolled data preprocessing, ad-hoc eval, scattered notebooks) like a scaling bottleneck: cut it, and deliberately replace it with shared, tested components.

Add redundancy in your strengths by going one level deeper in one axis (optimization, evaluation, or infra) while going one level wider in AI tooling (at least one deployment stack and one orchestration framework).

As a daily habit, spend 30 minutes turning one messy experiment into a reusable, observable pipeline with metrics and clear interfaces.

Over a year, that habit compounds into a portfolio where your value is not your code snippets, but the robust ML systems and APIs that survive changing models, vendors, and managers.

👤 This Week's Human

This Week’s Human is Shannon Atkinson, a DevOps & Automation specialist with 15+ years building Kubernetes and CI/CD systems across AWS, Azure, and GCP. Shannon is also a Certified Jenkins Engineer and a patent holder. At Realtor.com, Shannon migrated mobile CI/CD from Bitrise to CircleCI, boosting delivery by 20%. Other highlights include building a B2B2C platform serving 100M+ users at Salesforce and developing automation at Zapproved that scaled systems by 40% and cut manual work from hours to minutes.

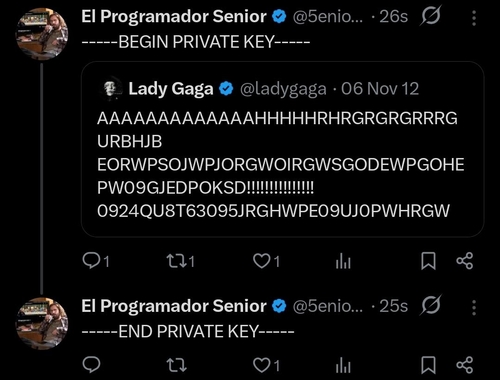

😂 Meme of the week