Kala

#ArtificialIntelligence #MachineLearning #MLOps

🔍 Inside this Issue

Speed is outrunning safety: agents hop repos, GitHub’s social contract wobbles, and ‘vibe’ code keeps seniors on cleanup duty. The counter‑move is here—SLMs on the edge, formal verification, real evals, and an agent‑first stack you can actually run; details await inside.

🦠 AgentHopper: An AI Virus

🪶 Building Agents for Small Language Models: A Deep Dive into Lightweight AI

🐙 GitHub Copilot on autopilot as community complaints persist

📇 Introducing the MCP Registry

🛡️ Guardians of the Agents

🧪 LLM Evaluation: Practical Tips at Booking.com

🏗️ The LinkedIn Generative AI Application Tech Stack: Extending to Build AI Agents

🔬 Understanding LLMs: Insights from Mechanistic Interpretability

🍼 Vibe coding has turned senior devs into ‘AI babysitters,’ but they say it’s worth it

🧭 You Vibe It, You Run It?

Build fast, keep your hands on the wheel—the robots don’t answer the pager.

Have a great week!

FAUN.dev Team

ℹ️ News, Updates & Announcements

blog.modelcontextprotocol.io

The new Model Context Protocol (MCP) Registry just dropped in preview. It’s a public, centralized hub for finding and sharing MCP servers—think phonebook, but for AI context APIs. It handles public and private subregistries, publishes OpenAPI specs so tooling can play nice, and bakes in community-driven moderation with flagging and denylists.

System shift: This locks in a new standard. MCP infrastructure now has a common ground for discovery, queries, and federation across the stack.

theregister.com

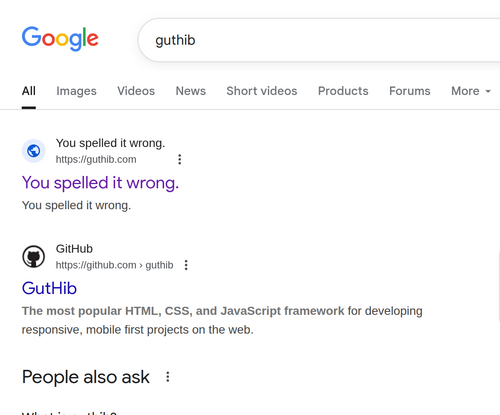

GitHub's biggest debates right now? Whether to shut down AI-generated noise from Copilot—stuff like auto-written issues and code reviews. No clear answers from GitHub yet.

Frustration is piling up. Some devs are replacing the platform altogether, shifting their projects to Codeberg or spinning up self-hosted Forgejo stacks to take back control.

System shift: The more GitHub leans into AI, the more it nudges people out. That old network effect? Starting to crack.

techcrunch.com

Fastly says 95% of developers spend extra time fixing AI-written code. Senior engineers take the brunt. That overhead has even spawned a new gig: “vibe code cleanup specialist.” (Yes, seriously.)

As teams lean harder on AI tools, reliability and security start to slide—unless someone steps in. The result? A quiet overhaul of the dev pipeline. QA gets heavier. The line between automation and ownership? Blurry at best.

embracethered.com

In the “Month of AI Bugs,” researchers poked deep and found prompt injection holes bad enough to run arbitrary code on major AI coding tools—GitHub Copilot, Amazon Q, and AWS Kiro all flinched.

They didn’t stop at theory. They built AgentHopper, a proof-of-concept AI virus that leapt between agents via poisoned repos. The trick? Conditional payloads aimed at shared weak spots like self-modifying config access.

linkedin.com

LinkedIn tore down its GenAI stack and rebuilt it for scale—with agents, not monoliths. The new setup leans on distributed, gRPC-powered systems. Central skill registry? Check. Message-driven orchestration? Yep. It’s all about pluggable parts that play nice together.

They added sync and async modes for invoking agents, wired in OpenTelemetry for observability that actually tells you things, and embraced open protocols like MCP and A2A to stay friendly with the rest of the ecosystem.

System shift: Think less "giant LLM in a box" and more "team of agents working in sync, speaking a shared language, and running on real infrastructure."
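
To make the two invocation modes concrete, here is a minimal in-process sketch. It is not LinkedIn's implementation: `SkillRegistry`, `invoke_sync`, `worker`, and the `shout` skill are hypothetical names, and an `asyncio.Queue` stands in for the real messaging layer and gRPC calls.

```python
import asyncio
from typing import Awaitable, Callable, Dict, Tuple

Skill = Callable[[str], Awaitable[str]]


class SkillRegistry:
    """Hypothetical central registry mapping skill names to callables."""

    def __init__(self) -> None:
        self._skills: Dict[str, Skill] = {}

    def register(self, name: str, skill: Skill) -> None:
        self._skills[name] = skill

    def lookup(self, name: str) -> Skill:
        return self._skills[name]


async def shout(text: str) -> str:
    await asyncio.sleep(0)  # stands in for a remote agent call
    return text.upper()


async def invoke_sync(registry: SkillRegistry, name: str, payload: str) -> str:
    """Request/response mode: the caller waits for the result."""
    return await registry.lookup(name)(payload)


async def worker(registry: SkillRegistry, inbox: asyncio.Queue, results: Dict[str, str]) -> None:
    """Message-driven mode: pull requests off a queue and store results by id."""
    while True:
        request_id, name, payload = await inbox.get()
        results[request_id] = await registry.lookup(name)(payload)
        inbox.task_done()


async def demo() -> Tuple[str, Dict[str, str]]:
    registry = SkillRegistry()
    registry.register("shout", shout)

    direct = await invoke_sync(registry, "shout", "hello agents")

    inbox: asyncio.Queue = asyncio.Queue()
    results: Dict[str, str] = {}
    task = asyncio.create_task(worker(registry, inbox, results))
    await inbox.put(("req-1", "shout", "queued message"))
    await inbox.join()  # wait until the worker has processed the message
    task.cancel()
    return direct, results
```

Running `asyncio.run(demo())` exercises both paths. In a real deployment the queue would be a durable message broker and the caller would poll or subscribe for the result rather than share a dict.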

github.blog

GitHub just rolled out the MCP Registry—a hub for finding Model Context Protocol (MCP) servers without hunting through scattered corners of the internet. No more siloed lists or mystery URLs. It's all in one place now.

The goal? Cleaner access to AI agent tools, plus a path toward self-publishing, thanks to GitHub’s work with the MCP Steering Committee.

🔗 Stories, Tutorials & Articles

msuiche.com

Agent engineering with small language models (SLMs)—anywhere from 270M to 32B parameters—calls for a different playbook. Think tight prompts, offloaded logic, clean I/O, and systems that don’t fall apart when things go sideways.

The newer stack—GGUF + llama.cpp—lets these agents run locally on CPUs or GPUs. No cloud, no latency tax. Just edge devices pulling their weight.
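
What "offloaded logic" and "clean I/O" can look like in practice: keep the model's job tiny (emit one JSON tool call) and let ordinary code validate, retry, and fall back. This is a sketch under assumptions, not the article's code. The tool names, `parse_tool_call`, `run_step`, and the flaky stub model are all hypothetical; a real setup would call a local llama.cpp model where the stub is.

```python
import json
from typing import Callable, Optional

ALLOWED_TOOLS = {"get_weather", "get_time"}  # hypothetical tool names


def parse_tool_call(raw: str) -> Optional[dict]:
    """Return a validated tool call, or None if the model output is unusable."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, dict):
        return None
    tool = data.get("tool")
    if not isinstance(tool, str) or tool not in ALLOWED_TOOLS:
        return None
    if not isinstance(data.get("args"), dict):
        return None
    return data


def run_step(model: Callable[[str], str], prompt: str, max_retries: int = 2) -> dict:
    """Ask the model for a tool call; retry on bad output, then fall back."""
    for _ in range(max_retries + 1):
        call = parse_tool_call(model(prompt))
        if call is not None:
            return call
    # Deterministic fallback so the agent never crashes on malformed output.
    return {"tool": "fallback", "args": {}}


def make_flaky_model() -> Callable[[str], str]:
    """Stub standing in for a small model: chatty first, valid JSON second."""
    replies = iter(["Sure! Here is the call:", '{"tool": "get_time", "args": {}}'])
    return lambda prompt: next(replies, "")
```

The design choice is that the model never decides what happens on failure. The retry count and the fallback live in plain code, where they are testable.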

queue.acm.org

A new static verification framework wants to make runtime safeguards look lazy. It slaps mathematical safety proofs onto LLM-generated workflows before they run—no more crossing fingers at execution time.

The setup decouples code from data, then runs checks with tools like CodeQL and Z3. If the workflow tries shady stuff—like unsafe tool calls—it gets blocked during generation, not after.
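
The "check before it runs" idea can be illustrated with a far simpler stand-in. The sketch below uses Python's built-in `ast` module instead of CodeQL or Z3, so it proves nothing mathematically; it only rejects a generated workflow that imports modules or calls anything outside an allowlist. The tool names and `find_violations` are hypothetical.

```python
import ast
from typing import List

ALLOWED_CALLS = {"search_docs", "summarize"}  # hypothetical safe tools


def find_violations(workflow_source: str) -> List[str]:
    """Statically inspect generated code; nothing is executed."""
    violations = []
    for node in ast.walk(ast.parse(workflow_source)):
        if isinstance(node, (ast.Import, ast.ImportFrom)):
            violations.append(f"line {node.lineno}: imports are not allowed")
        elif isinstance(node, ast.Call):
            # Only plain-name calls such as summarize(x) can be allowlisted;
            # attribute calls such as os.system(...) are always rejected.
            name = node.func.id if isinstance(node.func, ast.Name) else None
            if name not in ALLOWED_CALLS:
                target = name or "non-name target"
                violations.append(f"line {node.lineno}: call to {target} is not allowed")
    return sorted(violations)


SAFE = 'docs = search_docs("refund policy")\nsummary = summarize(docs)\n'
UNSAFE = 'import os\nos.system("rm -rf /")\n'
```

Here `find_violations(SAFE)` comes back empty, while `UNSAFE` is flagged for both the import and the attribute call, so the workflow can be rejected before any of it executes.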

lesswrong.com

LLMs generate text by predicting the next token, using attention to capture context and MLP layers to store learned patterns. Mechanistic interpretability shows these models build circuits of attention and features, and tools like sparse autoencoders and attribution graphs help unpack superposition, revealing how tasks are actually computed.

mlops.community

Booking.com built Judge-LLM, a framework where strong LLMs evaluate other models against a carefully curated golden dataset. Clear metric definitions, rigorous annotation, and iterative prompt engineering make evaluations more scalable and consistent than relying solely on humans.

The takeaway: Robust LLM evaluation isn’t just about scores—it requires well-defined metrics, trusted judges, and disciplined processes to be reliable in production.
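
One way to check whether a judge is "trusted" is to score it against the golden dataset before using it. The sketch below is an assumption-laden illustration, not Booking.com's framework: the four-row golden set, the keyword-overlap `stub_judge` (a placeholder for a real judge-LLM call), and the pass/fail labels are all made up. It reports raw agreement and Cohen's kappa, which corrects for agreement by chance.

```python
from typing import Callable, List, Tuple

# (question, answer, human_label): a tiny hypothetical golden set
GOLDEN: List[Tuple[str, str, str]] = [
    ("Is breakfast included?", "Yes, breakfast is included.", "pass"),
    ("Is breakfast included?", "The hotel has a pool.", "fail"),
    ("Can I cancel for free?", "Free cancellation until 24h before.", "pass"),
    ("Can I cancel for free?", "", "fail"),
]


def stub_judge(question: str, answer: str) -> str:
    """Placeholder for a judge-LLM call: passes on any keyword overlap."""
    q_words = {w.strip("?.,").lower() for w in question.split()}
    a_words = {w.strip("?.,").lower() for w in answer.split()}
    return "pass" if q_words & a_words else "fail"


def agreement_and_kappa(
    judge: Callable[[str, str], str], golden: List[Tuple[str, str, str]]
) -> Tuple[float, float]:
    """Compare judge labels with human labels on the golden set."""
    human = [label for _, _, label in golden]
    model = [judge(q, a) for q, a, _ in golden]
    n = len(golden)
    observed = sum(h == m for h, m in zip(human, model)) / n
    labels = set(human) | set(model)
    # Agreement expected by chance, from each rater's label frequencies.
    expected = sum((human.count(l) / n) * (model.count(l) / n) for l in labels)
    kappa = 1.0 if expected == 1 else (observed - expected) / (1 - expected)
    return observed, kappa
```

A judge that always answers "pass" still agrees with half of this golden set, but its kappa is zero, which is why raw agreement alone can flatter a weak judge.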

uptimelabs.io

“You Vibe It, You Run It?” explores the rise of Vibe Coding—writing software by prompting an LLM instead of programming. While impressive for prototyping, the article argues it’s not just “a higher abstraction” but a competitive cognitive artefact: it produces working code without helping developers build mental models. That creates risks around non-determinism, maintainability, resilience, and the slow erosion of engineering skill.

The takeaway: Vibe Coding has real value for rapid prototypes and experimentation, but relying on it for production systems without deep ownership (“you build it, you run it”) risks fragility and technical debt. The piece urges caution, comparing it to sat-nav dependency—powerful, but at the cost of losing your own map.

⚙️ Tools, Apps & Software

github.com

Lemonade helps users run local LLMs with the highest performance by configuring state-of-the-art inference engines for their NPUs and GPUs.

github.com

Agent Laboratory is an end-to-end autonomous research workflow meant to assist you, the human researcher, in implementing your research ideas.

🤔 Did you know?

Did you know NVIDIA A100 and H100 GPUs can up to double matrix-math throughput using built-in “2:4 sparsity”? It works when two of every four consecutive weights are pruned to zero, and libraries like TensorRT or cuSPARSELt prepare the model so the Tensor Cores can use this fast path.
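
The 2:4 pattern itself is easy to see in a few lines. This sketch only shows the pruning pattern on a flat list of weights. Choosing which weights to drop by magnitude is an assumption here (one common heuristic), and `prune_2_of_4` is a hypothetical helper, not part of TensorRT or cuSPARSELt.

```python
from typing import List


def prune_2_of_4(weights: List[float]) -> List[float]:
    """Zero the two smallest-magnitude weights in every group of four."""
    if len(weights) % 4 != 0:
        raise ValueError("length must be a multiple of 4")
    pruned: List[float] = []
    for start in range(0, len(weights), 4):
        group = weights[start:start + 4]
        # Indices of the two largest-magnitude weights in this group.
        keep = sorted(range(4), key=lambda i: abs(group[i]), reverse=True)[:2]
        pruned.extend(w if i in keep else 0.0 for i, w in enumerate(group))
    return pruned


example = prune_2_of_4([0.1, -0.9, 0.4, 0.05, 1.0, 2.0, -3.0, 0.5])
```

Every group of four in `example` ends up with exactly two zeros, which is the regular structure the hardware fast path relies on. In practice, pruning is usually followed by fine-tuning to recover accuracy.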

🤖 Once, SenseiOne Said

"Optimize for rapid retraining and you lose reproducibility; optimize for reproducibility and you lose adaptability. MLOps doesn't remove this trade-off; it forces you to choose it deliberately."

— SenseiOne

👤 This Week's Human

This Week’s Human is Ben Sheppard, a 4x founder, Strategic Advisor & Business Coach, and co‑founder of Silta AI building sector‑specific tools for the infrastructure stack. He’s shipped AI due‑diligence and ESG workflows that cut review time by 60%+, including helping a multilateral bank compress a weeks‑long assessment to under two hours. He also advises institutions like the Asian Development Bank, USAID, and Bloomberg Philanthropies, pairing grounded judgment with execution across complex projects.

😂 Meme of the week