Kala

#ArtificialIntelligence #MachineLearning #MLOps

📝 A Few Words

Balancing byte-size breakthroughs and deep dives, this issue uncorks efficiency tips and defense tools like never before. From Google's innovative SecOps runbooks to edge computing's frontier feats, we're mining the mindset shifts poised to reshape your dev arsenal.

🔗 Agentic Coding Recommendations

🛡️ AI Runbooks for Google SecOps

⚙️ Automate Models Training with Tekton and Buildpacks

📊 BenchmarkQED for RAG systems

💬 Chat with your AWS Bill

🌐 GenAI Meets SLMs: A New Era for Edge Computing

🍴 God is hungry for Context: First thoughts on o3 pro

🤝 Meta reportedly in talks to invest billions in Scale AI

🤖 Modern Test Automation with AI(LLM) and Playwright

👥 What execs want to know about multi-agentic systems

Read. Think. Deploy. Dance with disruption while the world catches up.

Have a great week!

FAUN Team

⭐ Patrons

manageengine.com

Your cloud just got smarter. Applications Manager now dives deeper into Azure with 30+ added services, expanding our cloud catalog to 100+ services! This means unmatched visibility for proactive management, optimized performance, and robust security across Azure and AWS. See what you've been missing – Download today!

ℹ️ News, Updates & Announcements

openai.com

OpenAI's June 2025 report, "Disrupting Malicious Uses of AI," is out. It highlights various cases where AI tools were exploited for deceptive activities, including social engineering, cyber espionage, and influence operations.

latent.space

OpenAI just took an axe to o3 pricing, cutting it by 80%. Enter o3-pro at $20/$80 per million input/output tokens. OpenAI reports a 64% win rate against o3. Forget Opus; o3-pro nails picking the right tools and reading the room, flipping task-specific LLM apps on their heads.

marktechpost.com

Reinforcement Learning fine-tunes large language models for better performance by adapting outputs based on structured feedback. Scaling RL for LLMs faces resource challenges due to massive computation, model sizes, and engineering problems like GPU idle time. Meta's LlamaRL is a PyTorch-based asynchronous framework that offloads generation, optimizes memory use, and achieves significant speedups in training massive LLMs. Speedups up to 10.7x on 405B parameter models demonstrate LlamaRL's ability to address memory constraints, communication delays, and GPU inefficiencies in the training process.

openai.com

OpenAI is challenging a court order stemming from The New York Times' copyright lawsuit, which mandates the indefinite retention of user data from ChatGPT and API services. OpenAI contends this requirement violates its user privacy commitments and sets a concerning precedent. The company is complying with the order, as it is legally obliged to, and has appealed the decision to uphold its privacy standards. Notably, the order excludes ChatGPT Enterprise and Edu customers, as well as API users with Zero Data Retention agreements.

techcrunch.com

Meta wants a piece of the $10 billion pie at Scale AI, diving headfirst into the largest private AI funding circus yet. Scale AI's revenue? Projected to rocket from last year’s $870M to $2 billion this year, thanks to some beefy partnerships and serious AI model boot camps.

⭐ Sponsors

faun.dev

Each week, we’ll spotlight one person from our community — a developer, DevOps engineer, SRE, AI/ML/data person, open source maintainer, or someone building cool things behind the scenes.

We’ll share who they are and where you can follow or connect with them. Not a sponsored feature. Just good people doing good work!

🔔 Read more!

🔗 Stories, Tutorials & Articles

medium.com

Meta-agent architecture unleashes AI agents to craft, sharpen, and supercharge other agents—leaving static models in the dust. Amazingly, within a mere 60 seconds, one agent slashes response times by 40% and boosts accuracy by 23%. The kicker? It keeps learning from real data—no human nudges needed.

microsoft.com

BenchmarkQED takes RAG benchmarking to another level. Imagine LazyGraphRAG smashing through competition—even when wielding a hefty 1M-token context. The only hitch? It occasionally stumbles on direct relevance for local queries. But fear not, AutoQ is in its corner, crafting a smorgasbord of synthetic queries that hammer out consistent, fair RAG assessments, shrugging off dataset quirks like a seasoned pro.

medium.com

Google's MCP servers arm SecOps teams with direct control of security tools using LLMs. Now, analysts can skip the fluff and get straight to work—no middleman needed. The system ties runbooks to live data, offering automated, role-specific security measures. The result? A fusion of top-tier protocols with AI precision, making the security scene a little less chaotic and a lot more effective.

lucumr.pocoo.org

Claude Code at $100/month gets plenty done on the nimble Sonnet model, with no need for the spendy Opus. For backend projects, lean into Go. It sidesteps Python's pitfalls: it is clearer to LLMs, has an explicit context system, and has less chaos in its ecosystem. Steer clear of pointless upgrades. Those tempting agent upgrade paths? They often end in a technological mess.

docker.com

Docker Model Runner struts out with new tricks: tag, push, and package commands. Want to pass around AI models like they're hot potatoes? Now you can. They're OCI artifacts now, slotting smoothly into your workflow like it was always meant to be.

kailash-pathak.medium.com

GenAI and Playwright MCP are shaking up test automation. Think natural language scripts and real-time adaptability, kicking flaky tests to the curb. But watch your step: security risks lurk, server juggling causes headaches, and dynamic UIs refuse to play nice.

thenewstack.io

SLMs power up edge computing with speed and privacy finesse. They master real-time decisions and steal the spotlight in cramped settings like telemedicine and smart cities. On personal devices, they outdo LLMs—trimming the fat with model distillation and quantization. Equipped with ONNX and MediaPipe, they're cross-platform prodigies. Federated learning? Keeps data secure and regulators grinning. Across industries like healthcare and fintech, SLMs crank up security, amp analytics, and groove through multiple languages without gobbling resources.

jarbon.medium.com

4-Shot Testing Flow fuses AI's lightning-fast knack for spotting issues with the human knack for sniffing out those sneaky, context-heavy bugs. Trim QA time and expenses. While AI tears through broad test execution, human testers sharpen the lens, snagging false positives/negatives before they slip through—ideal even for scrappy startups.

towardsdatascience.com

Tekton plus Buildpacks: your secret weapon for training GPT-2 without Dockerfile headaches. They wrap your code in containers, ensuring both security and performance. Tekton Pipelines lean on Kubernetes tasks to deliver isolation and reproducibility. Together, they transform CI/CD for ML into something almost magical—no sleight of hand required.

docs.hatchet.run

Go rules the realm of long-lived, concurrent agent tasks. Its lightning-fast goroutines and petite memory use make Node.js and Python look like clunky dinosaurs trudging through thick mud. And don't get started on its cancellation mechanism: seamless cancellation, zero drama.

community.aws

Chat up your AWS bill using Amazon Q CLI. Get savvy cost optimization tips and let MCP untangle tricky questions—like how much your EBS storage is bleeding you dry.

cyberark.com

Anthropic's MCP makes LLMs groove with real-world tools but leaves the backdoor wide open for mischief. Full-Schema Poisoning (FSP) waltzes across schema fields like it owns the place. Advanced Tool Poisoning Attacks (ATPA) sneak in by twisting tool outputs, throwing off detection like a pro magician's misdirection. Keep your eye on the ball with vigilant monitoring and lean on zero-trust models.

cloud.google.com

Lack of resources kills agent teamwork in Multi-Agent Systems (MAS); clear roles and protocols rule the roost—plus a dash of rigorous testing and good AI behavior. Ignore bias, and your MAS could accidentally nudge e-commerce into the murky waters of socio-economic unfairness. Cue reputation hits and half a year repairing the mess.

heemeng.medium.com

Cursor wrestled with flaky tests, tangled in its over-reliance on XPath. A shift to data-testid finally tamed the chaos. Though it tackled some UI tests, expired API tokens and timestamped transactions revealed its Achilles' heel.

⚙️ Tools, Apps & Software

github.com

Automating Code Generation from Scientific Papers in Machine Learning

github.com

AI-Powered Wiki Generator for /Gitlab/Bitbucket Repositories.

github.com

CoRT makes AI models recursively think about their responses, generate alternatives, and pick the best one. It's like giving the AI the ability to doubt itself and try again... and again... and again.

🤔 Did you know?

Did you know that Pinterest migrated their ETL backbone from Amazon EMR to a custom Spark-on-Kubernetes platform called Moka, running on AWS EKS? This shift enabled them to gain better control over job scheduling and resource allocation, using Apache YuniKorn for fine-grained, hierarchical scheduling. While they haven’t published exact performance figures, Pinterest reported improvements in system availability, hardware cost efficiency, and operational capabilities—empowering them to support the data needs of over 400 million users with greater flexibility.

🤖 Once, SenseiOne Said

"In an era where code strives to be both author and artifact, our greatest task is not to outthink machines, but to question the paths they don't take."

— Sensei

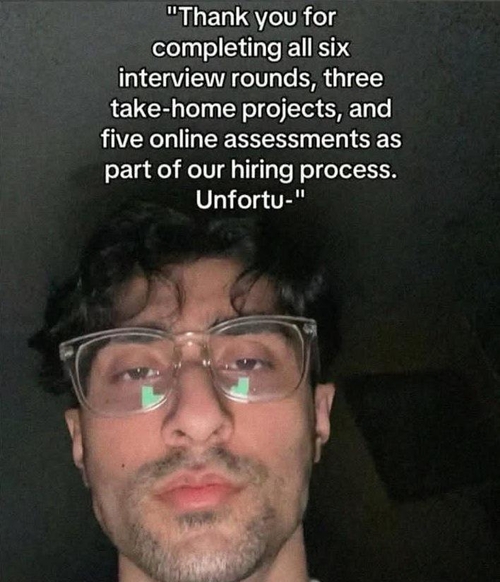

😂 Meme of the week