ℹ️ News, Updates & Announcements

Introducing the MCP Registry

The new Model Context Protocol (MCP) Registry just dropped in preview. It’s a public, centralized hub for finding and sharing MCP servers—think phonebook, but for AI context APIs. It handles public and private subregistries, publishes OpenAPI specs so tooling can play nice, and bakes in community-driven moderation with flagging and denylists.

System shift: This sets a shared standard. MCP infrastructure now has common ground for discovery, queries, and federation across the stack.
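
To make "subregistries plus denylists" concrete, here is a minimal Python sketch of how a private subregistry might curate upstream entries. Everything in it is a hypothetical stand-in: `ServerEntry`, `build_subregistry`, the `flag_count` field, the flag threshold, and the example server names are illustrative assumptions, not the registry's actual schema, API, or moderation rules.

```python
from dataclasses import dataclass
from typing import Iterable, List, Set


@dataclass(frozen=True)
class ServerEntry:
    """Hypothetical, simplified registry entry (not the real MCP Registry schema)."""
    name: str
    description: str
    flag_count: int = 0  # community flags received (hypothetical field)


def build_subregistry(
    upstream: Iterable[ServerEntry],
    private_entries: Iterable[ServerEntry],
    denylist: Set[str],
    flag_threshold: int = 3,
) -> List[ServerEntry]:
    """Merge public upstream entries with private ones, applying moderation.

    Upstream entries are dropped if denylisted or flagged at least
    `flag_threshold` times. Private entries are trusted as-is and replace
    any upstream entry with the same name.
    """
    merged = {}
    for entry in upstream:
        if entry.name in denylist or entry.flag_count >= flag_threshold:
            continue  # moderated out of this subregistry
        merged[entry.name] = entry
    for entry in private_entries:
        merged[entry.name] = entry  # private entries win on name collisions
    return sorted(merged.values(), key=lambda e: e.name)


if __name__ == "__main__":
    upstream = [
        ServerEntry("io.github.example/weather", "Weather lookups"),
        ServerEntry("io.github.example/spam", "Known-bad server"),
        ServerEntry("io.github.example/notes", "Note taking", flag_count=5),
    ]
    private = [ServerEntry("corp.internal/hr-tools", "Internal HR lookups")]
    result = build_subregistry(upstream, private, denylist={"io.github.example/spam"})
    print([e.name for e in result])
    # ['corp.internal/hr-tools', 'io.github.example/weather']
```

Letting private entries override public ones keeps internal servers authoritative inside an organization; a stricter design would run them through the denylist too.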

GitHub Copilot on autopilot as community complaints persist

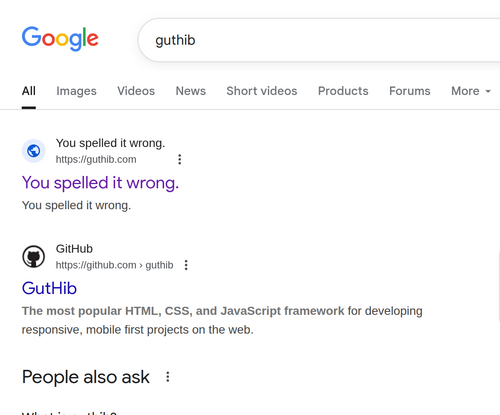

One of GitHub's biggest debates right now: should users be able to block AI-generated noise from Copilot, such as auto-written issues and code reviews? GitHub hasn't given a clear answer yet.

Frustration is piling up. Some devs are leaving the platform altogether, moving their projects to Codeberg or spinning up self-hosted Forgejo instances to take back control.

System shift: The more GitHub leans into AI, the more it nudges people out. That old network effect? Starting to crack.

Vibe coding has turned senior devs into ‘AI babysitters,’ but they say it’s worth it

Fastly says 95% of developers spend extra time fixing AI-written code. Senior engineers take the brunt. That overhead has even spawned a new gig: “vibe code cleanup specialist.” (Yes, seriously.)

As teams lean harder on AI tools, reliability and security start to slide unless someone steps in. The result? A quiet overhaul of the dev pipeline. QA gets heavier. The line between automation and ownership? Blurry at best.

AgentHopper: An AI Virus

In the “Month of AI Bugs,” researchers poked deep and found prompt injection holes bad enough to run arbitrary code on major AI coding tools—GitHub Copilot, Amazon Q, and AWS Kiro all flinched.

They didn’t stop at theory. They built AgentHopper, a proof-of-concept AI virus that spread between agents through poisoned repos. The trick? Conditional prompt-injection payloads that adapt to whichever agent reads them, aimed at a shared weak spot: agents that can modify their own configuration files.
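
The shared weak spot is an agent that can rewrite the files that configure it. One defensive pattern, shown here as a minimal Python sketch, is to vet agent-initiated file writes before they land. The `PROTECTED_DIRS` entries and the `is_write_allowed` hook are hypothetical assumptions; none of the affected tools is known to expose a hook with this name.

```python
from pathlib import PurePosixPath

# Illustrative assumption: directories where coding agents keep settings that
# change their own behavior. Not an authoritative list for any product.
PROTECTED_DIRS = frozenset({".vscode", ".kiro", ".amazonq"})


def is_write_allowed(repo_relative_path: str) -> bool:
    """Return False for agent-initiated writes that could reconfigure an agent."""
    path = PurePosixPath(repo_relative_path)
    # Refuse absolute paths and ".." segments so traversal can't dodge the check.
    if path.is_absolute() or ".." in path.parts:
        return False
    # Block the write if any path component is a protected config directory.
    return not any(part in PROTECTED_DIRS for part in path.parts)


if __name__ == "__main__":
    for p in ["src/app.py", ".vscode/settings.json",
              "src/../.kiro/settings/mcp.json", "/etc/hosts"]:
        print(p, is_write_allowed(p))
```

This check works on path strings only; a production guard would also need to resolve symlinks and account for case-insensitive filesystems.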

The LinkedIn Generative AI Application Tech Stack: Extending to Build AI Agents

LinkedIn extended its GenAI stack to build AI agents at scale, favoring composable agents over monoliths. The new setup leans on distributed, gRPC-powered systems. Central skill registry? Check. Message-driven orchestration? Yep. It’s all about pluggable parts that play nice together.

They added sync and async modes for invoking agents, wired in OpenTelemetry for observability that actually tells you things, and embraced open protocols like MCP and A2A to stay friendly with the rest of the ecosystem.

System shift: Think less "giant LLM in a box" and more "team of agents working in sync, speaking a shared language, and running on real infrastructure."
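
A toy, in-process sketch of the registry-plus-messaging idea, assuming Python's `asyncio`: skills are looked up by name in a central registry, then invoked either synchronously (the caller awaits the reply) or asynchronously (a message goes onto a queue and a worker handles it later). `SkillRegistry`, `invoke_sync`, `worker`, and `count_words` are hypothetical names. The source describes gRPC services and message-driven orchestration; this stand-in replaces both with plain function calls and an `asyncio.Queue`.

```python
import asyncio
from typing import Awaitable, Callable, Dict

# A skill takes a JSON-like payload and returns a JSON-like result.
Skill = Callable[[dict], Awaitable[dict]]


class SkillRegistry:
    """Hypothetical central registry mapping skill names to handlers."""

    def __init__(self) -> None:
        self._skills: Dict[str, Skill] = {}

    def register(self, name: str, skill: Skill) -> None:
        self._skills[name] = skill

    def lookup(self, name: str) -> Skill:
        return self._skills[name]  # KeyError for unknown skills


async def invoke_sync(registry: SkillRegistry, name: str, payload: dict) -> dict:
    # Sync mode: the caller waits for the skill's reply.
    return await registry.lookup(name)(payload)


async def worker(registry: SkillRegistry, queue: asyncio.Queue, results: dict) -> None:
    # Async mode: drain messages from the queue and record each outcome.
    while True:
        msg_id, name, payload = await queue.get()
        try:
            results[msg_id] = await registry.lookup(name)(payload)
        except Exception as exc:  # keep the worker alive on failures
            results[msg_id] = {"error": repr(exc)}
        finally:
            queue.task_done()


async def count_words(payload: dict) -> dict:
    return {"words": len(payload["text"].split())}


async def demo():
    registry = SkillRegistry()
    registry.register("count_words", count_words)

    direct = await invoke_sync(registry, "count_words", {"text": "agents speak a shared protocol"})

    queue: asyncio.Queue = asyncio.Queue()
    results: dict = {}
    consumer = asyncio.create_task(worker(registry, queue, results))
    await queue.put((1, "count_words", {"text": "first job"}))
    await queue.put((2, "missing_skill", {}))  # fails, recorded as an error
    await queue.join()  # wait until every message is processed
    consumer.cancel()
    try:
        await consumer
    except asyncio.CancelledError:
        pass
    return direct, results


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

The queue decouples callers from a skill's latency and failures: the unknown skill produces a recorded error instead of crashing the worker or blocking the caller.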

GitHub MCP Registry: The fastest way to discover AI tools

GitHub just rolled out the MCP Registry—a hub for finding Model Context Protocol (MCP) servers without hunting through scattered corners of the internet. No more siloed lists or mystery URLs. It's all in one place now.

The goal? Cleaner access to AI agent tools, plus a path toward self-publishing, thanks to GitHub’s work with the MCP Steering Committee.

👉 Enjoyed this? Read more news on FAUN.dev/news