FAUN.dev's Programming Weekly Newsletter

🔗 View in your browser | ✍️ Publish on FAUN.dev | 🦄 Become a sponsor

VarBear

#SoftwareEngineering #Programming #DevTools

🔍 Inside this Issue

Consolidate or rewrite? Databases swallow full‑text and JSON tricks, Reddit trades Python for Go, and Tor exits C, while Copilot jumps to GPT‑5.2, bots evolve, and AWS’s new login adds a fresh phishing wrinkle. It’s a week of sharp trade‑offs and practical wins; the details below can save you CPU, latency, and a breach.

🔎 14x Faster Faceted Search in PostgreSQL with ParadeDB

🧠 GitHub Copilot Adds GPT-5.2 With Long-Context and UI Generation

🛡️ Guarding My Git Forge Against AI Scrapers

🧰 How Reddit Migrated Comments Functionality from Python to Go

📦 How We Migrated DB 1 to DB 2, 1 Billion Records Without Downtime

⚙️ How We Saved 70% of CPU and 60% of Memory in Refinery’s Go Code, No Rust Required.

🔒 Phishing for AWS Credentials via the New 'aws login' Flow

🧬 SQLite JSON Superpower: Virtual Columns + Indexing - DB Pro Blog

🦊 Tor Goes Rust: Introducing Arti, a New Foundation for the Future of Tor

🐍 Use Python for Scripting!

Smarter today than yesterday, now put it to work.

See you in the next issue!

FAUN.dev() Team

⭐ Patrons

faun.dev

Hey there,

We’re extending the 25% discount for Building with GitHub Copilot and all our courses on FAUN.Sensei(). The last activation window was short, so we’re giving everyone more time to use it.

Building with GitHub Copilot is a practical, in-depth guide to working with Copilot as more than an autocomplete tool. It shows how to turn Copilot into a genuine coding partner that can navigate your codebase, reason across files, generate tests, review pull requests, explain changes, automate tasks, and even operate as an autonomous agent when needed.

You'll learn how to customize Copilot for your team, use instruction files effectively, integrate GitHub CLI, extend capabilities with GitHub Extensions or MCP servers, and more!

The course covers the full Copilot feature set and shows how to use it effectively to improve your productivity, including lesser-known capabilities and advanced options.

⏳ Use the coupon SENSEI2525 before it expires on December 31. After that, the discount ends.

ℹ️ News, Updates & Announcements

faun.dev

The Tor Project is rebuilding its core client in Rust. The new implementation, Arti, is set to phase out the old C codebase. It already handles network connections and anonymized traffic. Full client features and onion services are coming next, step by step.

Why Rust? Safer concurrency. Easier embedding. Cleaner guts. It's not just a rewrite, it's an exit strategy from C.

faun.dev

OpenAI just released GPT-5.2 into public preview for all paid GitHub Copilot users. It’s wired into VS Code, GitHub Mobile, and the Copilot CLI, no extra friction.

This model handles long-context reasoning and UI generation like it was built for it. It’s already pulling ahead on benchmarks like GDPval and SWE-Bench Pro.

⭐ Sponsors

bytevibe.co

The Never :q! Hoodie is for developers who know that quitting is rarely the right command. Soft, warm, and built for long sessions, it features a plush cotton-poly blend, a classic fit, and a message every Vim user understands.

🎁 Use SUBSCR1B3R for a limited 25% discount

ℹ️ The coupon applies to all other products as well.

⏳ Offer ends December 31

🔗 Stories, Tutorials & Articles

paradedb.com

ParadeDB brings Elasticsearch-style faceting to PostgreSQL: ranked search results and filter counts, all in one shot. No extra passes.

It pulls this off with a custom window function, planner hooks, and Tantivy's columnar index under the hood. That's how they’re squeezing out 10×+ speedups on hefty datasets.

System shift: Structured SQL and unstructured full-text search now run in the same query. That’s not just faster, it changes how folks wire up search in relational stacks.
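
To make "ranked results and filter counts in one shot" concrete, here is a minimal Python sketch of the idea. It is not ParadeDB's API or implementation: the `search_with_facets` helper, the toy term-count score, and the `DOCS` sample are all assumptions made for illustration.

```python
import heapq
from collections import Counter

def search_with_facets(docs, term, facet_field, top_n=3):
    """Return (ids of the top_n ranked hits, facet counts) from one pass over docs."""
    facet_counts = Counter()
    scored = []
    for doc in docs:
        # Toy relevance score (an assumption): how often the term occurs in the body.
        score = doc["body"].lower().split().count(term.lower())
        if score == 0:
            continue
        # The same pass that scores a hit also counts it toward its facet bucket.
        facet_counts[doc[facet_field]] += 1
        scored.append((score, doc["id"]))
    # Highest score first; ties broken by lowest id for a stable order.
    top = heapq.nsmallest(top_n, scored, key=lambda s: (-s[0], s[1]))
    return [doc_id for _, doc_id in top], dict(facet_counts)

# Hypothetical sample data.
DOCS = [
    {"id": 1, "category": "db", "body": "postgres search search"},
    {"id": 2, "category": "db", "body": "sqlite json"},
    {"id": 3, "category": "tools", "body": "search tooling"},
    {"id": 4, "category": "db", "body": "full text search in postgres"},
]

hits, facets = search_with_facets(DOCS, "search", "category", top_n=2)
print(hits, facets)
```

The point of the sketch is only that the facet counts cover every match while the ranked list is cut to `top_n`, and both come out of the same scan.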

hypirion.com

Shell scripts love to break across macOS and Linux. Blame all the GNU vs BSD quirks: sed, date, readlink, take your pick. The mess adds up fast, especially in build pipelines and CI systems.

This post makes the case for a cleaner way: Python 3. Standard library. Predictable behavior. Same results whether it's Ubuntu, Arch, or some stubborn MacBook in QA.
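
As a rough illustration of that argument, here is a small standard-library sketch covering the three tools named above. The helper names (`resolve`, `utc_stamp`, `replace_in_file`) are hypothetical and not taken from the linked post.

```python
import re
from datetime import datetime, timezone
from pathlib import Path

def resolve(path):
    """Stand-in for `readlink -f`: absolute path with symlinks resolved."""
    return Path(path).resolve()

def utc_stamp(now=None):
    """Stand-in for `date -u +%Y-%m-%dT%H:%M:%SZ`; `now` must be a UTC-aware datetime."""
    now = now or datetime.now(timezone.utc)
    return now.strftime("%Y-%m-%dT%H:%M:%SZ")

def replace_in_file(path, pattern, replacement):
    """Stand-in for in-place `sed -i`, whose flag syntax differs between GNU and BSD."""
    p = Path(path)
    text = p.read_text(encoding="utf-8")
    p.write_text(re.sub(pattern, replacement, text), encoding="utf-8")
```

One caveat: `re` uses Python's regex dialect, not sed's basic regular expressions, so patterns copied from an existing script may need adjusting.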

medium.com

A team moved over 1 billion production records, no downtime, no drama. The stack: dual writes, Kafka retries, and idempotent inserts to keep it clean.

They ran shadow reads to sniff for errors, chunked the transfers with checksums, and held off indexing to keep inserts fast. Caches got warmed early to dodge cold start thrash.
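
Two of those pieces, chunked transfers with checksums and idempotent inserts, can be sketched in a few lines of Python. This is an assumption-heavy toy, not the team's code: it uses in-memory SQLite on both sides, a hypothetical `records(id, payload)` table with positive integer ids, and leaves out dual writes, Kafka retries, and shadow reads entirely.

```python
import hashlib
import sqlite3

def chunk_checksum(rows):
    """Order-sensitive SHA-256 over a chunk of (id, payload) rows."""
    digest = hashlib.sha256()
    for row_id, payload in rows:
        digest.update(f"{row_id}:{payload}\n".encode("utf-8"))
    return digest.hexdigest()

def copy_in_chunks(src, dst, chunk_size=2):
    """Copy records from src to dst in id-ordered chunks, verifying each chunk."""
    last_id = 0  # assumes ids start above zero
    while True:
        rows = src.execute(
            "SELECT id, payload FROM records WHERE id > ? ORDER BY id LIMIT ?",
            (last_id, chunk_size),
        ).fetchall()
        if not rows:
            return
        # Idempotent insert: replaying a chunk after a retry adds no duplicates.
        dst.executemany(
            "INSERT OR IGNORE INTO records (id, payload) VALUES (?, ?)", rows
        )
        dst.commit()
        first_id, last_id = rows[0][0], rows[-1][0]
        copied = dst.execute(
            "SELECT id, payload FROM records WHERE id BETWEEN ? AND ? ORDER BY id",
            (first_id, last_id),
        ).fetchall()
        if chunk_checksum(copied) != chunk_checksum(rows):
            raise RuntimeError(f"checksum mismatch in chunk {first_id}..{last_id}")

def make_db(rows=()):
    """Create an in-memory database with the hypothetical records table."""
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE records (id INTEGER PRIMARY KEY, payload TEXT)")
    db.executemany("INSERT INTO records VALUES (?, ?)", rows)
    db.commit()
    return db
```

Because the insert ignores rows that already exist, running `copy_in_chunks` twice leaves the destination unchanged. In a live system with dual writes, a row updated mid-copy would trip the checksum and need reconciliation logic that this sketch does not attempt.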

blog.bytebytego.com

Reddit successfully migrated its monolithic, high-traffic Comments service from legacy Python to modern Go microservices with zero user disruption. This was achieved by using a "tap compare" for reads and isolated "sister datastores" for writes, ensuring safe verification of the new code against production data. Despite challenges like cross-language serialization and database race conditions, the migration succeeded, resulting in a dramatic reduction in p99 latency for all write operations.
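
The general shape of a tap compare for reads can be sketched as below. This is a generic illustration, not Reddit's implementation: `tap_compare`, the two handler callables, and the `mismatches` list are hypothetical names.

```python
def tap_compare(request, legacy_handler, candidate_handler, mismatches):
    """Serve from the legacy path; shadow-call the candidate and record differences."""
    legacy_response = legacy_handler(request)
    try:
        candidate_response = candidate_handler(request)
        if candidate_response != legacy_response:
            mismatches.append((request, legacy_response, candidate_response))
    except Exception as exc:
        # A failing candidate is recorded but must never affect what users see.
        mismatches.append((request, legacy_response, repr(exc)))
    # Users always get the legacy answer while the new service is being verified.
    return legacy_response
```

A production version would more likely run the candidate call asynchronously and on a sample of traffic, so the comparison adds no latency to the user-facing path.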

vulpinecitrus.info

To stop a wave of scraping on their self-hosted Forgejo, the author stacked defenses like a firewall architect on caffeine. First came manual IP rate-limiting. Then NGINX caching and traffic shaping. Finally: Iocaine 3.

That last one didn’t just block bots, it lured them into a maze of junk pages. The Nam-Shub-of-Enki module spun up auto-generated nonsense and rerouted bad actors straight into it. Think bot honeypot meets digital oubliette.

Caching flopped: requests were too random to reuse. Only Iocaine held the line, tagging fingerprints, filtering by ASN, and slashing CPU burn, bandwidth, and power draw.

System shift: Bot scraping keeps evolving. Defenders now need smarter, per-request detection and dynamic responses, especially for open-facing infra. Bot traffic isn't obvious anymore. It's organized.

honeycomb.io

Refinery 3.0 cuts CPU by 70% and slashes RAM by 60%. The trick: selective field extraction from serialized spans. No full deserialization. Fewer heap allocations. Way less waste.

It also recycles buffers, handles metrics smarter, and is gearing up to parallelize its core decision loop.

dbpro.app

SQLite’s JSON virtual generated columns punch way above their weight. They let you index JSON fields on the fly, no migrations, no whining. Computed like real columns, queryable like real columns, indexable like real columns. But from JSON.

Want flexibility without surrendering speed? This flips the script. Skip the schema stress and still get fast queries.
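
Here is a minimal sketch of the technique using Python's built-in `sqlite3` module. The `events` table, the `$.user.id` path, and the index name are assumptions for illustration, and it needs a SQLite build with generated columns (3.31+) and the JSON functions available.

```python
import json
import sqlite3

def build_db():
    """Create a table of raw JSON, then bolt an indexed virtual column onto it."""
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, data TEXT)")
    rows = [
        {"user": {"id": 7}, "type": "login"},
        {"user": {"id": 9}, "type": "click"},
        {"user": {"id": 7}, "type": "logout"},
    ]
    db.executemany(
        "INSERT INTO events (data) VALUES (?)", [(json.dumps(r),) for r in rows]
    )
    # Added after the data exists: a VIRTUAL column stores nothing in the table itself.
    db.execute(
        "ALTER TABLE events ADD COLUMN user_id INTEGER "
        "GENERATED ALWAYS AS (json_extract(data, '$.user.id')) VIRTUAL"
    )
    db.execute("CREATE INDEX idx_events_user_id ON events (user_id)")
    return db

def event_types_for(db, user_id):
    """Filter on the virtual column as if it were an ordinary one."""
    rows = db.execute(
        "SELECT json_extract(data, '$.type') FROM events WHERE user_id = ? ORDER BY id",
        (user_id,),
    ).fetchall()
    return [r[0] for r in rows]

def query_plan(db):
    """Return the planner's description of a lookup by user_id."""
    rows = db.execute(
        "EXPLAIN QUERY PLAN SELECT id FROM events WHERE user_id = 7"
    ).fetchall()
    return " ".join(r[3] for r in rows)
```

Calling `query_plan(build_db())` should mention `idx_events_user_id`, which is how you can check that the lookup is served by the index rather than a full scan.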

medium.com

AWS rolled out a new aws login CLI command using OAuth 2.0 with PKCE. It grabs short-lived credentials, finally pushing out those dusty long-lived access keys.

But here’s the hitch: The remote login flow opens up a phishing gap. Since the CLI session and browser session aren’t bound, attackers could spoof the flow and dodge phishing-resistant MFA.

Why it matters: Ephemeral creds are a win for security. But without tighter session binding and clear user guidance, this move leaves an open flank. AWS is raising the bar, but teams will need to follow suit.
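
For context on what PKCE does and does not cover, here is a sketch of the S256 verifier/challenge pair defined in RFC 7636. It shows the generic mechanism only, not the AWS CLI's code; `make_pkce_pair` and `challenge_for` are hypothetical helper names.

```python
import base64
import hashlib
import secrets

def challenge_for(verifier):
    """S256 challenge: base64url(SHA-256(verifier)) without padding."""
    digest = hashlib.sha256(verifier.encode("ascii")).digest()
    return base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")

def make_pkce_pair():
    """Return (code_verifier, code_challenge) for one authorization attempt."""
    # 32 random bytes encode to a 43-character verifier (RFC 7636 allows 43 to 128).
    verifier = (
        base64.urlsafe_b64encode(secrets.token_bytes(32)).rstrip(b"=").decode("ascii")
    )
    return verifier, challenge_for(verifier)
```

The client sends the challenge with the authorization request and the verifier with the token request, so only the client that started the flow can redeem the code. That ties the code to the client, but it does not prove that the person approving in the browser is the person who started the CLI session, which is the gap described above.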

⚙️ Tools, Apps & Software

github.com

GitVex is a fully open-source serverless git hosting platform. No VMs, no containers, just Durable Objects and Convex.

github.com

The all-in-one tool for keeping track of your domain name portfolio. Got domain names? Get Domain Locker!

github.com

StepKit is an open-source SDK for building production-ready durable workflows.

🤔 Did you know?

Did you know that Microsoft Excel intentionally treats the year 1900 as a leap year, even though it technically wasn't? This "bug" was deliberately implemented in the very first version of Excel to ensure compatibility with Lotus 1-2-3, which dominated the market at the time and contained the same error.
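
If you ever convert Excel day serials by hand, the phantom leap day is the off-by-one to watch for. A small sketch, assuming whole-number serials from the default 1900 date system; `excel_serial_to_date` is a hypothetical helper.

```python
import calendar
from datetime import date, timedelta

def excel_serial_to_date(serial):
    """Convert an Excel 1900-system day serial to a real calendar date."""
    if serial == 60:
        raise ValueError("serial 60 is Excel's nonexistent 29 February 1900")
    # Serial 1 is 1 January 1900. Past the phantom day, shift back by one.
    epoch = date(1899, 12, 31) if serial < 60 else date(1899, 12, 30)
    return epoch + timedelta(days=serial)

print(calendar.isleap(1900))     # False: divisible by 100 but not by 400
print(excel_serial_to_date(59))  # 1900-02-28
print(excel_serial_to_date(61))  # 1900-03-01
```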

🤖 Once, SenseiOne Said

"Zero-config tools replace your decisions with theirs, and the bug reports still have your name on them. If you can't explain the defaults, you can't debug the failure."

— SenseiOne

⚡ Growth Notes

Pick one ugly, recurring problem at work and quietly become the person who solves it in code and in process. Start a small internal toolkit repo that standardizes how your team does that thing (logging, tracing, feature flags, load testing, whatever is constantly painful) and keep it brutally simple and documented. Any time you touch a feature, extend that toolkit by one incremental improvement instead of one-off fixes.

Over a few quarters, you will have visible leverage: people depend on your tools, your taste, and your judgment, and that pulls you into higher-impact projects and decisions. The habit is simple: ship one reusable abstraction per month, measured by at least one teammate choosing it again without you asking.

👤 This Week's Human

This Week’s Human is Shannon Atkinson, a DevOps & Automation specialist with 15+ years building Kubernetes and CI/CD systems across AWS, Azure, and GCP, and a Certified Jenkins Engineer and patent holder. At Realtor.com, Shannon migrated mobile CI/CD from Bitrise to CircleCI, boosting delivery by 20%; at Salesforce, built a B2B2C platform serving 100M+ users; at Zapproved, developed automation that scaled systems 40% and cut manual work from hours to minutes.

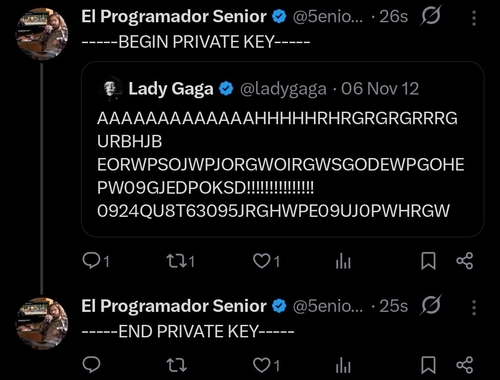

😂 Meme of the week