Kala

#ArtificialIntelligence #MachineLearning #MLOps

🔍 Inside this Issue

Power plays and sharp edges: GPT-5 chest-thumping, Anthropic slamming the door on OpenAI, event-driven agents creeping into CI, and a code editor patched after prompt injection got real. Skim the headlines, then dive into the rabbit holes—the details are where the leverage is.

🕵️ Anthropic says OpenAI engineers using Claude Code ahead of GPT-5 launch

🚀 GPT-5 is here

🕸️ Perplexity is using stealth, undeclared crawlers to evade website no-crawl directives

🐞 Cursor AI Code Editor Fixed Flaw Allowing Attackers to Run Commands via Prompt Injection

🤖 Event-Driven Agents in Action

🧭 A practical guide on how to use the GitHub MCP server

📊 Which LLM writes the best analytical SQL?

🧪 Forcing LLMs to be evil during training can make them nicer in the long run

🏠 Building an AI Home Security System Using .NET, Python, CLIP, Semantic Kernel, Telegram, and Raspberry Pi 4

You’re now dangerously well-briefed—turn it into leverage.

Have a great week!

FAUN.dev Team

ℹ️ News, Updates & Announcements

winbuzzer.com

Manus just dropped Wide Research—a swarm of 100+ AI agents, each spun up as a Turing-complete VM. They don’t follow orders. They solve massive tasks in parallel, straight from natural language prompts.

Forget rigid chains of command. These agents don’t play roles—they run jobs. No hierarchies. No brittle workflow maps. Just raw, dynamic compute.

System shift: Think less “one AI tool,” more “tiny cloud of general-purpose minds.” It’s personal-scale orchestration. Distributed horsepower, directed by the user.

docker.com

Docker wired up an event-driven AI agent using Mastra and the Docker MCP Gateway to handle tutorial PRs—comment, close, the works. It runs a crew of agents powered by Qwen3 and Gemma3, synced through GitHub webhooks and MCP tools, all spun up with Docker Compose.

System shift: Agentic frameworks are starting to meet real-time triggers. DevOps might never sleep again.
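As a rough illustration of the webhook-triggered pattern described above, here is a minimal Python sketch. It is not Docker's implementation (which uses Mastra and the Docker MCP Gateway); it only shows the front door of such a system: checking GitHub's `X-Hub-Signature-256` header and routing a `pull_request` delivery to an agent task. The task names `review_pr` and `ignore` and the function names are hypothetical.

```python
import hashlib
import hmac
import json


def verify_signature(secret: bytes, body: bytes, signature_header: str) -> bool:
    """Check the X-Hub-Signature-256 header against the raw request body."""
    expected = "sha256=" + hmac.new(secret, body, hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(expected, signature_header)


def route_event(event_name: str, body: bytes) -> str:
    """Map a webhook delivery to a hypothetical agent task name."""
    payload = json.loads(body)
    if event_name == "pull_request" and payload.get("action") == "opened":
        # Hypothetical: hand the newly opened PR to a review agent.
        return "review_pr"
    return "ignore"


def handle_delivery(secret: bytes, event_name: str, body: bytes,
                    signature_header: str) -> str:
    """Reject unsigned or tampered deliveries, then route the rest."""
    if not verify_signature(secret, body, signature_header):
        return "rejected"
    return route_event(event_name, body)
```

In a real service, `event_name` would come from the `X-GitHub-Event` request header and `body` must be the raw, unparsed request bytes, since the signature is computed over them.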

bleepingcomputer.com

Anthropic just shut the door on OpenAI, yanking access to the Claude Code API after spotting OpenAI engineers poking around, likely prepping for GPT-5.

Claude Code isn’t just an internal toy. It’s a serious coding co-pilot, used in the wild by devs who want answers without babysitting a model.

aws.amazon.com

Amazon just dropped the DynamoDB MCP data modeling tool—a natural language assistant that turns app specs into DynamoDB schemas without the boilerplate. It plugs into Amazon Q and VS Code, tracks access patterns, estimates costs, and throws in real-time design trade-offs.

thehackernews.com

XM Cyber dropped a practical guide for rolling out Continuous Threat Exposure Management (CTEM) with its platform—geared for those eyeing 2025 readiness. It dives into wiring up real-time exposure visibility, validating actual risk, and tightening up remediation across complex enterprise setups.

Why it matters: CTEM flips the old playbook. No more snapshot audits. It's about always-on, risk-driven workflows that don't wait for a quarterly scan to tell you where you're bleeding.

🔗 Stories, Tutorials & Articles

openai.com

GPT-5 tightens reasoning and lands cleaner hits in math, science, finance, and law. It outpaces GPT-4—not just wider, but deeper.

tinybird.co

Tinybird threw 19 top LLMs at a 200M-row GitHub dataset, testing how well they could turn plain English into solid SQL. Most models kept their syntax clean—but when it came to writing SQL that actually ran well and returned the right results, they lagged behind human pros. Messy schemas or tricky prompts? Total tripwire.
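The gap between "valid SQL" and "correct SQL" is easy to see in a toy check. The sketch below is not Tinybird's harness: it uses an in-memory SQLite table as a stand-in, and the `events` table, its rows, and the helper names are made up for illustration. It treats a generated query as correct only if it runs and returns the same rows as a hand-written reference query, ignoring row order.

```python
import sqlite3
from collections import Counter


def build_demo_db() -> sqlite3.Connection:
    """Create a tiny, made-up events table in memory."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE events (repo TEXT, type TEXT)")
    conn.executemany(
        "INSERT INTO events VALUES (?, ?)",
        [("a/x", "PushEvent"), ("a/x", "WatchEvent"), ("b/y", "PushEvent")],
    )
    return conn


def same_result(conn: sqlite3.Connection, candidate_sql: str,
                reference_sql: str) -> bool:
    """True if the candidate runs and yields the same multiset of rows."""
    try:
        got = conn.execute(candidate_sql).fetchall()
    except sqlite3.Error:
        return False  # the candidate did not even run
    want = conn.execute(reference_sql).fetchall()
    return Counter(got) == Counter(want)


REFERENCE = ("SELECT repo, COUNT(*) FROM events "
             "WHERE type = 'PushEvent' GROUP BY repo")
# Syntactically fine, but it forgets the filter on event type.
PLAUSIBLE_BUT_WRONG = "SELECT repo, COUNT(*) FROM events GROUP BY repo"
```

Comparing result sets catches the second query even though any SQL parser would accept it. A fuller evaluation would also time the queries, which this sketch leaves out.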

jamiemaguire.net

The post details the process of creating an AI home security system using .NET, Python, Semantic Kernel, a Telegram Bot, Raspberry Pi 4, and OpenAI. It covers the hardware and software requirements, as well as the steps to install and test the camera module and the PIR sensor. It also includes code snippets for detecting movement with the PIR sensor, capturing photos, and sending alerts to a Telegram bot.

technologyreview.com

Researchers built an automated pipeline to hunt down the neuron patterns behind bad LLM behavior—sycophancy, hallucinations, malice, the usual suspects. Then they trained models to watch for those patterns in real time.

Anthropic didn’t just steer models after training like most. They baked the corrections into the training loop itself. That move made their models tougher—less prone to picking up nasty habits, even from noisy or biased data.

System shift: Editing behavior during training with neuron-level signals could beat the pants off post-hoc steering—and save a lot of compute in the process.

github.blog

GitHub offers a managed MCP endpoint, so teams don't have to run and maintain the server themselves. The guide shows how to plug it into AI workflows for collaboration and code review.

blog.cloudflare.com

Cloudflare reports that Perplexity's crawlers disguise their identity to get around websites' no-crawl preferences. In response, Cloudflare delisted Perplexity as a verified bot and now blocks the stealth crawling.

⚙️ Tools, Apps & Software

github.com

A Model Context Protocol (MCP) server written in Go that provides text completion capabilities using local llama.cpp models. This server exposes a single MCP tool that accepts text prompts and returns AI-generated completions using locally hosted language models.

github.com

An open-source AI agent that brings the power of Grok directly into your terminal.

🤔 Did you know?

Did you know that when Multi-Instance GPU (MIG) mode is enabled on NVIDIA A100 or H100 GPUs, GPU-to-GPU peer-to-peer (P2P) communication is disabled, whether over NVLink or PCIe? NCCL then treats each MIG slice as an isolated device, so collective operations across slices fall back to host-mediated PCIe transfers rather than high-speed NVLink or NVSwitch; NVIDIA explicitly documents this lack of P2P support for MIG slices. If you need fast multi-GPU collectives for tensor- or pipeline-parallel workloads, use full-GPU configurations rather than MIG partitions.
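One cheap guard is to have a job launcher warn before it starts a collective-heavy workload on MIG slices. The sketch below assumes devices are selected by UUID in `CUDA_VISIBLE_DEVICES` and relies on MIG device identifiers starting with `MIG-`; it will not notice MIG devices selected by plain index. The function names are hypothetical.

```python
import os


def mig_slices(visible_devices: str) -> list:
    """Return the entries of a CUDA_VISIBLE_DEVICES value that name MIG slices."""
    entries = [d.strip() for d in visible_devices.split(",")]
    return [d for d in entries if d.startswith("MIG-")]


def mig_warning(env=None):
    """Return a warning string if MIG slices are visible, else None."""
    env = os.environ if env is None else env
    slices = mig_slices(env.get("CUDA_VISIBLE_DEVICES", ""))
    if not slices:
        return None
    return (f"{len(slices)} MIG slice(s) visible: P2P is unavailable, "
            "expect slower collectives")
```

A launcher could call `mig_warning()` at startup and log the result when it is not `None`.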

👤 This Week's Human

This Week’s Human is Andrew Foe, founder building NVIDIA-powered edge datacenters at HyperAI and a 20+ year cloud/AI practitioner. He’s helped hosting partners become cloud providers, led IoDis to NVIDIA Elite Partner status, and ships sustainable GPU infrastructure (A100/H100/L40S). Previously with Dell, HP, Lenovo, and more, he’s worked with teams at Booking.com, Leaseweb, and AMS-IX to turn complex infrastructure into systems that actually run.

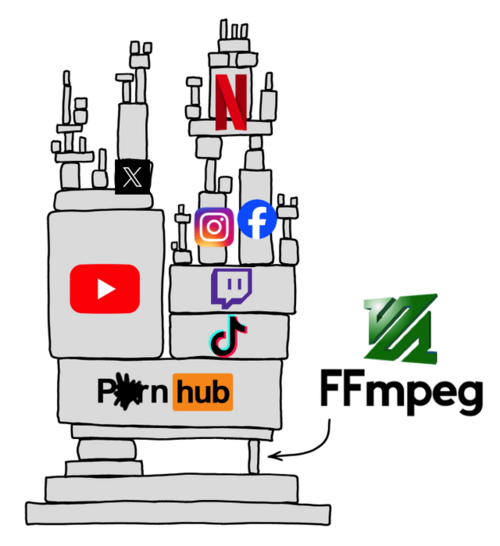

😂 Meme of the week