Kala

#ArtificialIntelligence #MachineLearning #MLOps

📝 A Few Words

Imagine a world where AI scripting slips between administrative fingers, dev tools underdeliver, and small yet powerful optimizations eclipse grand reboots. Dive into this landscape as we explore the uncanny velocity of AI's spread and the lurking shadows of untested efficiencies.

🧠 AI As Profoundly Abnormal Technology

📊 AI Coding Tools Underperform in Field Study

🐞 [Cursor] Bugbot is out of beta

🐍 GitHub Spark in public preview for Copilot Pro+ subscribers

📉 The vibe coder's career path is doomed

🔎 How I Use Claude Code to Ship Like a Team of Five

📈 The Big LLM Architecture Comparison

🔐 Microsoft Copilot Rooted for Unauthorized Access

⚖️ How AI Data Integration Transforms Your Data Stack

📡 Unlocking High-Performance AI/ML in Kubernetes with DraNet

Read. Think. Ship. Repeat.

Have a great week!

FAUN.dev Team

⭐ Patrons

info.perfectscale.io

Running Kubernetes efficiently is already complex. Add LLM workloads, and suddenly you're dealing with expensive GPU nodes that can't afford to sit idle.

Join Arthur Berezin (VP Product at PerfectScale by DoiT) and Anton Weiss (Chief Cluster Whisperer) as they share a clear, proven approach to optimizing Kubernetes costs without compromising reliability.

You'll learn:

→ How to manage CPU, memory, and GPU resources per workload.

→ How to align these with autoscaling for maximum efficiency.

✅ Clear code examples. ✅ Real use cases. ✅ No fluff.

Last 20 seats available. Register now!

ℹ️ News, Updates & Announcements

opensource.googleblog.com

DraNet slaps networking woes straight out the door. It natively handles RDMA (Remote Direct Memory Access) in K8s, so you can toss those convoluted scripts. Now in beta and weighing only 50MB, it offers deployments that are lean, speedy, and unyieldingly secure.

Signal: RDMA is moving from HPC and niche use cases into mainstream cloud-native stacks, notably in AI/ML.

docker.com

64% of users find AI tools actually lighten the workload, yet 59% roll their eyes at the hype—function outshines flash. But behind the curtain, data prep still plays villain, tripping up 24% of AI builders.

anthropic.com

Anthropic teams fire up Claude Code. They automate data pipelines and squash Kubernetes IP exhaustion. They churn out tests and trace cross-repo context. Non-dev squads use plain-text prompts to script workflows, spin up Figma plugin automations, and mock up UIs from screenshots—zero code.

Trend to watch: AI copilots like Claude Code are busting out of dev silos. They’re sneaking into every function. AI now owns the workflow.

cursor.com

Bugbot hunts bugs in PR diffs, flagging logic slip-ups and strange edge cases. It then detects security gaps, blending top LLMs with custom heuristics. It plugs into the Cursor dashboard and runs dedicated Bugbot rules. Beta stats: 1M+ reviews, 1.5M+ issues found. Half the bugs are fixed before merge.

Trend to watch: Teams embed AI-driven review agents in CI. They snatch bugs early.

morethanmoore.substack.com

Intel scraps its Germany and Poland foundries, shifting assembly from Costa Rica to Vietnam and Malaysia. It slows Ohio fab construction while ramping up Intel 18A/18A‑P and planning Intel 14A around key customers. SMT returns. Focus shifts to Panther Lake, Nova Lake, and Granite Rapids. AI strategy pivots toward inference and agentic workloads. Intel aims to unify silicon, systems, and software into a single integrated stack.

Infra shift: Intel centralizes assembly/test and reins in fab expansion to sync capacity with demand.

infoq.com

METR ran a randomized controlled trial (RCT) with 16 open-source devs. They tackled real-world code tasks using Claude 3.5 and Cursor Pro. The pitch: 40% speed boost. Reality: 19% slowdown. A deep dive into 246 screen recordings laid bare friction in prompting, vetting suggestions, and merging code. That friction devoured AI’s head start.

Why it matters: Teams must pair AI rollouts with RCTs. They unveil hidden snags that torpedo promised gains.

github.blog

GitHub Spark spins natural-language prompts into full-stack AI apps in minutes. It taps Claude Sonnet 4 to scaffold UI and server logic. It hooks up data storage, LLM inference, hosting, GitHub Actions, Dependabot, plus multi-LLM smarts from OpenAI, Meta, DeepSeek and xAI—zero config.

Trend to watch: NLP platforms bake CI/CD, hosting and multi-LLM inference in. They kill boilerplate in AI dev.

cybersecuritynews.com

April 2025 Copilot Enterprise update slipped in a Jupyter sandbox. It snuck in a PATH-poisonable pgrep at root’s entrypoint. Attackers could hijack that for root execution. Eye Security flagged the hole in April. By July 25, 2025, Microsoft patched this moderate bug. No data exfiltration reported.

Why it matters: AI sandboxes widen attack surfaces, forcing teams to harden container security.
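
To see why a PATH-poisonable lookup is dangerous, here is a minimal Python sketch of the general mechanism. It is not a reproduction of the Copilot issue. The temporary directories, the stub `pgrep` files, and the helper names `make_stub`, `resolve`, and `demo` are all invented for illustration, and the sketch assumes a POSIX system.

```python
import os
import shutil
import tempfile
from typing import List, Optional, Tuple


def make_stub(directory: str, name: str) -> str:
    """Create a harmless executable stub called `name` inside `directory`."""
    target = os.path.join(directory, name)
    with open(target, "w") as handle:
        handle.write("#!/bin/sh\necho stub\n")
    os.chmod(target, 0o755)
    return target


def resolve(command: str, path_dirs: List[str]) -> Optional[str]:
    """Return the file a PATH-style lookup would pick for a bare command name."""
    return shutil.which(command, path=os.pathsep.join(path_dirs))


def demo() -> Tuple[Optional[str], Optional[str], str, str]:
    # Hypothetical layout: one directory a low-privilege user can write to,
    # one that stands in for a trusted system directory.
    with tempfile.TemporaryDirectory() as writable_dir, \
            tempfile.TemporaryDirectory() as system_dir:
        trusted = make_stub(system_dir, "pgrep")
        planted = make_stub(writable_dir, "pgrep")

        # The writable directory comes first, so the planted file wins.
        poisoned_choice = resolve("pgrep", [writable_dir, system_dir])

        # Searching only trusted directories (or calling an absolute path)
        # removes the planted file from consideration.
        safe_choice = resolve("pgrep", [system_dir])
        return poisoned_choice, safe_choice, planted, trusted


if __name__ == "__main__":
    poisoned, safe, planted, trusted = demo()
    print("poisoned lookup picks planted file:", poisoned == planted)
    print("restricted lookup picks trusted file:", safe == trusted)
```

The takeaway for hardening: a privileged script that calls a bare command name inherits whatever the search path says. Calling binaries by absolute path, or setting a fixed PATH of non-writable directories, closes that gap.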

🔗 Stories, Tutorials & Articles

rudderstack.com

AI data integration obliterates manual ETL chores. It handles schema mapping, transformation, anomaly detection. Deployments sprint ahead. Machine learning models digest structured, semi-structured, unstructured formats. They forge real-time pipelines bristling with governance and security.

Infra shift: AI-driven pipelines vanquish schema drift, heralding self-healing data infrastructures.

offensai.com

AI agents tap MCP servers and Strands Agents. They fire off tools that chart IAM permission chains and sniff out AWS privilege escalations. Enter the “Sum of All Permissions” method. It hijacks EC2 Instance Connect, warps through SSM to swipe data, and leaps roles—long after static scanners nod off.

Trend to watch: AI-driven threat emulation makes old playbooks obsolete, unleashing constant, evolving cloud exploits.
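
One way to picture charting a permission chain is as a graph walk. The sketch below is a toy model, not the article's tooling or its method. The principals, the `can_become` edges, and the helper names are hypothetical, and real IAM evaluation (conditions, boundaries, deny rules) is ignored entirely.

```python
from collections import deque
from typing import Dict, List, Set


def reachable_principals(start: str, can_become: Dict[str, List[str]]) -> List[str]:
    """Breadth-first walk over 'principal A can act as principal B' edges."""
    seen = {start}
    order = [start]
    queue = deque([start])
    while queue:
        current = queue.popleft()
        for nxt in can_become.get(current, []):
            if nxt not in seen:
                seen.add(nxt)
                order.append(nxt)
                queue.append(nxt)
    return order


def effective_permissions(
    start: str,
    can_become: Dict[str, List[str]],
    permissions: Dict[str, Set[str]],
) -> Set[str]:
    """Union the permissions of every principal reachable from `start`."""
    total: Set[str] = set()
    for principal in reachable_principals(start, can_become):
        total |= permissions.get(principal, set())
    return total


# Hypothetical account layout, invented for this example.
CAN_BECOME = {
    "dev-user": ["web-instance-role"],        # e.g. by logging in to the instance
    "web-instance-role": ["ops-automation-role"],
}
PERMISSIONS = {
    "dev-user": {"ec2-instance-connect:SendSSHPublicKey"},
    "web-instance-role": {"ssm:SendCommand"},
    "ops-automation-role": {"s3:GetObject"},
}

if __name__ == "__main__":
    print(reachable_principals("dev-user", CAN_BECOME))
    print(sorted(effective_permissions("dev-user", CAN_BECOME, PERMISSIONS)))
```

A scanner that inspects each principal in isolation would never credit `dev-user` with `s3:GetObject`. Summing along the chain does, which is the gap this kind of analysis aims at.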

blog.florianherrengt.com

An AI-powered dev workflow combined Claude, Playwright, and a Postgres-backed REST API to ship 2–3 features per day. But as complexity grew, multi-agent loops broke down, tests ballooned, and schema drift demanded increasingly precise prompts and manual corrections. The result: more time spent managing context and debugging automation than writing code — exposing the technical debt baked into LLM-driven development.

Implication: The role of the engineer is morphing — from creator to curator of machine-generated complexity. As LLMs accelerate output, they offload syntax but amplify cognitive overhead: debugging opaque logic, aligning fragmented context, and safeguarding brittle systems. Without strong architecture and deep domain fluency, teams risk trading velocity for shallow control and compounding fragility.

every.to

Claude Code zips out Ruby functions, tests, and pull requests via CLI prompts across multiple git worktrees. It slays manual typing and ejects IDE plugins. It spins up ephemeral test environments to replay bugs, pries open external gem code, and syncs branches, commits, and PRs in one go.

magazine.sebastianraschka.com

Architectures since GPT-2 still ride transformers. They crank memory and performance with RoPE, swap GQA for MLA, sprinkle in sparse MoE, and roll sliding-window attention. Teams shift where RMSNorm sits in the block. They tweak layer norms with QK-Norm, locking in training stability across modern models.

Trend to watch: In 2025, small, smart optimizations will matter more than big, complex system redesigns.
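
For a feel of how small these tweaks are, here is a rough pure-Python sketch of RMSNorm and of QK-Norm applied to a single query/key pair. It is a simplified illustration under stated assumptions, not any model's actual code: it handles one attention head, omits RoPE, and the function names and the `eps` default are chosen for this example.

```python
import math
from typing import List, Sequence


def rms_norm(x: Sequence[float], gain: Sequence[float], eps: float = 1e-6) -> List[float]:
    """Scale x by the reciprocal of its root-mean-square, then apply a per-element gain."""
    mean_square = sum(v * v for v in x) / len(x)
    scale = 1.0 / math.sqrt(mean_square + eps)
    return [v * scale * g for v, g in zip(x, gain)]


def qk_norm_score(
    query: Sequence[float],
    key: Sequence[float],
    gain_q: Sequence[float],
    gain_k: Sequence[float],
) -> float:
    """Attention logit where query and key are RMS-normalized before the dot product."""
    q = rms_norm(query, gain_q)
    k = rms_norm(key, gain_k)
    dot = sum(a * b for a, b in zip(q, k))
    return dot / math.sqrt(len(q))


if __name__ == "__main__":
    ones = [1.0, 1.0, 1.0, 1.0]
    q = [1.0, 2.0, 3.0, 4.0]
    k = [0.5, -1.0, 2.0, 0.0]
    big_q = [10.0 * v for v in q]
    # Rescaling the query barely moves the logit once both sides are normalized.
    print(qk_norm_score(q, k, ones, ones))
    print(qk_norm_score(big_q, k, ones, ones))
```

Because the logit no longer grows with the raw magnitude of the query or key, attention scores stay in a bounded range, which is the stability argument usually given for QK-Norm.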

blog.ai-futures.org

Scott Alexander’s team argues that AI is a profoundly abnormal technology on track for recursive self-improvement within 2–10 years. They counter the AIANT (AI As A Normal Technology) view of slow, regulated diffusion by showing that LLMs are rapidly adopted in medicine, law, and software, bypassing institutional controls.

Trend to watch: Bottom-up LLM adoption is outpacing gatekeepers, accelerating unsupervised AI integration.

blog.skypilot.co

SkyPilot spins an AI-native control plane on Neocloud Kubernetes. It binds GPU pools across clouds into one resilient grid. Teams define ML jobs in a single YAML. SkyPilot drives gang scheduling, SSH/Jupyter access, and multi-cluster compute. It does auto failover and cost-smart scheduling.

Infra shift: AI-native orchestration unifies GPU silos and crushes lock-in.

strangeloopcanon.com

LLMs function as next-token predictors. With scant user context, they hallucinate—spinning fresh backstories. As these models morph into autonomous agents, context engineering—feeding facts, memory, tools, guardrails—halts rogue behavior.

Trend to watch: A jump in context engineering. It pins LLMs to real facts, blocks hallucinations, tames misalignment.

blog.kubeflow.org

This post maps out a Kubeflow Pipelines workflow on Spark, Feast, and KServe. It tackles fraud detection end-to-end: data prep, feature store, live inference. It turns infra into code, ensures feature parity between training and serving, and registers ONNX models in the Kubeflow Model Registry.

🛍️ Swag, Deals, And Offers

⚙️ Tools, Apps & Software

github.com

MCP Toolbox for Databases is an open source MCP server for databases.

github.com

Open Source AI coding assistant for planning, building, and fixing code. We're a superset of Roo, Cline, and our own features.

github.com

This is a Python API which allows you to get the transcript/subtitles for a given YouTube video. It also works for automatically generated subtitles, and it requires neither an API key nor a headless browser, unlike Selenium-based solutions!

github.com

Anthropic's Interactive Prompt Engineering Tutorial

🤔 Did you know?

Did you know that GitHub enforces a default 90‑day retention for GitHub Actions artifacts and logs, after which they are automatically deleted? You can customize this retention window at the repository, organization, or even artifact level to expire data sooner and reduce storage churn. This simple expiration setting helps control storage growth and associated costs without requiring major infrastructure changes.

🤖 Once, SenseiOne Said

"Code is written for humans to understand and for machines to follow; misunderstand either, and you'll find chaos in both."

— Sensei

👤 This Week's Human

This week, we’re highlighting Bill Mulligan, a Community Leader at Isovalent where he nurtures the Cilium and eBPF communities to enhance cloud native networking, security, and observability. As a Governing Board Member at the eBPF Foundation and a Cilium Committer, Bill contributes to open source collaboration and ecosystem development. With experience from the CNCF, he supports innovation through community engagement.

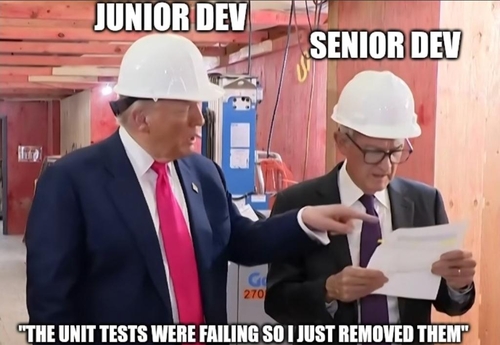

😂 Meme of the week