DevOpsLinks

#DevOps #SRE #PlatformEngineering

📝 A Few Words

Remember when AI seemed like a silent partner, quietly stepping up code reviews? Now it's a wild card: it boosts speed and code quality, yet rattles stability. Meanwhile, in the world of infrastructure, journeys are becoming personal. Whether trekking through hypothetical server hikes or taming Kubernetes' quirks, there's always a lesson worth unearthing.

⛰️ ScreenshotOne Infrastructure: The Four-Day Expedition

⚙️ AI's Impact on Developer Speed vs. Stability

🤖 AI-Powered Detection: Ransomware in the Cloud

🔄 Bash Shell 5.3: Command Substitution Unleashed

🐞 Debugging Pinterest's Search on Kubernetes

🌐 Discord's Trillion-Message Indexing

⚡ ArgoCD & GitOps Made Conversational

✈️ Cloud Storage Bucket Relocation: No More Downtime

🔍 OpenTelemetry Tracing for NGINX

🎢 It’s a GitOps Roller Coaster, Hold On Tight

You've just equipped yourself with pioneering dev tools and sharp insights—deploy wisely!

Have a great week!

FAUN Team

⭐ Patrons

info.perfectscale.io

This practical guide breaks down the most effective autoscaling tools and techniques, helping you choose the right solutions for your environment.

No more manually managing clusters, struggling with traffic spikes, or wasting resources on over-provisioned systems!

Get your free copy now!

ℹ️ News, Updates & Announcements

pulumi.com

Pulumi just leveled up. It now runs Terraform modules straight up. This means all that slick Pulumi magic paired with the Terraform groundwork you've already laid. Drop in a module, and Pulumi takes over execution and state management. Consider it your bridge to full Pulumi bliss.

octopus.com

52% of teams believe they're ace at cloning apps from Git. High-performers? 70% of them share in this delusion. Yet, lurking infrastructure wrinkles often deflate their grand plans. GitOps, that wild ride, inspires confidence. It dips, then soars. But just when enthusiasts think they're cruising, they slam into the brick wall of "partially recreatable" setups. Blame missed dependencies and invisible infrastructure for that headache.

aws.amazon.com

ArgoCD MCP Server teams up with Amazon Q CLI to shake up Kubernetes with natural language controls. Finally, GitOps that even the non-tech crowd can handle. Kiss those DevOps roadblocks goodbye. No more brain strain from Kubernetes. Now, plain language syncs apps, reveals resource trees, and checks health statuses.

cloud.google.com

GKE Inference Gateway flips LLM serving on its head. It’s all about that GPU-aware smart routing. By juggling the KV Cache in real time, it amps up throughput and slices latency like a hot knife through butter.

diginomica.com

Open-source devs got stuck, spending 19% more time on tasks thanks to AI tools, contrary to the hype and vendor bluster. Yet a baffling 69% clung to AI, suggesting some sneaky perks lurk beneath the surface.

cloud.google.com

Google's Cloud Storage bucket relocation makes data moves a breeze. Downtime? Forget about it. Your metadata and storage class stay intact, so you can focus on optimizing costs instead of stressing over logistics.

hashicorp.com

AI adoption edges code quality up by 3.4% and speeds up reviews by 3.1%, but beware: a 7.2% nosedive in delivery stability opens ugly security holes. Counter AI's risky behavior with a fortress-like infrastructure, a central vault for secrets, and a transparency upgrade to reclaim stability and nail compliance.

infoq.com

Wix has slipped probabilistic AI into its CI/CD mix, and it doesn't clutter the works. This AI chews through build logs, shaving hours off developer workloads. Migrating 100 modules took three months? Not anymore. They've sliced it to a mere 24-48 hours by marrying AI insights with the Model Context Protocol. Efficiency just got a little smarter.

linuxiac.com

Bash 5.3 chops fork overhead by running substitutions smack in the current shell. A godsend for those sweating tight loops and embedded systems. Plus, feast on the new GLOBORDER variable—it hands you the reins for pathname sorting precision. And to sweeten the deal, Readline 8.3 sprinkles in case-insensitive searching. Time to flex your scripting muscles.

cloud.google.com

Meet the GKE Inference Gateway—a swaggering rebel changing the way you deploy LLMs. It waves goodbye to basic load balancers, opting instead for AI-savvy routing. What does it do best? Turbocharge your throughput with nimble KV Cache management. Throw in some NVIDIA L4 GPUs and Google's model artistry, and scaling those gnarly generative AI workloads becomes a breeze. No bottleneck sweating necessary.

🔗 Stories, Tutorials & Articles

last9.io

Wiring up NGINX with OpenTelemetry shines a spotlight on your request paths. Logs, traces, metrics—no more fumbling in the dark. Dive into crystal-clear traces for every single request. Spot API or database slowdowns? Connect the dots in a snap. Thank the OpenTelemetry Collector for pulling strings like a virtuoso.

medium.com

Migrating Pinterest's search infrastructure to Kubernetes sounds cozy, right? But it tripped over a rare hiccup: sluggish 5-second latencies. The culprit? cAdvisor, overzealously spying on memory like a helicopter parent. Switching off WSS (working set size) estimation? Problem evaporated.

daryllswer.com

IPTTTH in India serves up enterprise-grade connectivity minus the ISP circus. It's like bypassing the bouncer to get inside the exclusive club. Costs? A slippery beast to tame. And those routing snafus? An obstacle course for the faint of heart. Yet, for networking aficionados, it's a ride you won't want to miss. Tech allure meets Layer 8 migraines. Hang on tight.

faranheit.medium.com

GitHub's giving passwords the boot for HTTPS logins. Say hello to public-key SSH or a Personal Access Token. So, load up those SSH keys—or hit the road.

forbes.com

Platform engineering isn't just another flashy tech term. It's the backbone of reliability and speed in workflows. But the "golden path" it maps can swiftly morph into a chaotic Wild West of unruly, bespoke processes if you're not careful. Enter the DevEx team as the cavalry, bringing order with shared tools and pipelines. Still, without a solid game plan—like weaving in external integrations—devs might still scramble for makeshift fixes. Chaos: 1, Order: 0.

dzone.com

Server-driven UI (SDUI) shifts UI control to the server, allowing for instant, dynamic updates without app releases. JSON payloads define components, improving agility but requiring client-side rendering adjustments. Complex UI changes may still need app updates due to missing client-side components.
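
To make the trade-off concrete, here is a minimal sketch of a client that renders whatever components the server sends. The payload shape, the `RENDERERS` registry, and the component names are all hypothetical and not taken from the article; the point is only that a component type the client has never shipped cannot be rendered until the app itself is updated.

```python
import json

# Hypothetical client-side registry: component type -> render function.
# Only the types compiled into the app appear here.
RENDERERS = {
    "text": lambda props: props["value"],
    "button": lambda props: f"[ {props['label']} ]",
}


def render(payload: str) -> list[str]:
    """Render each component of a server-sent JSON payload to a string."""
    lines = []
    for component in json.loads(payload)["components"]:
        renderer = RENDERERS.get(component["type"])
        if renderer is None:
            # The client has no code for this type; the server cannot fix that.
            lines.append(f"<unsupported: {component['type']}>")
        else:
            lines.append(renderer(component.get("props", {})))
    return lines


# Assumed payload shape, for illustration only.
payload = json.dumps({"components": [
    {"type": "text", "props": {"value": "Welcome back"}},
    {"type": "button", "props": {"label": "Continue"}},
    {"type": "carousel", "props": {"items": []}},
]})
print(render(payload))
```

The server can reorder or reword the `text` and `button` entries at will, but the `carousel` falls through to the fallback branch until a client release adds a renderer for it.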

dzone.com

Cloud platforms face increasing ransomware and malware threats, leading to a shift towards AI and ML for advanced detection. Supervised models excel at known threats, while unsupervised methods detect novel attacks but generate more false positives. Deep learning is great for complex patterns but lacks interpretability. Reinforcement learning offers adaptive responses but requires careful management. Challenges include false positives, adversarial attacks, privacy, and concept drift. Innovations like Explainable AI, Federated Learning, Hybrid Models, and Edge AI are crucial for enhancing cloud security with AI-powered detection systems.
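
As a rough illustration of the unsupervised side, the sketch below flags observations that sit far from a learned baseline using a simple z-score. The metric (file writes per minute), the numbers, and the threshold are invented for the example and do not come from the article; real detection systems use far richer features and models.

```python
from statistics import mean, pstdev


def flag_anomalies(baseline: list[float], observed: list[float],
                   threshold: float = 3.0) -> list[float]:
    """Return observed values whose z-score against the baseline exceeds threshold."""
    mu = mean(baseline)
    sigma = pstdev(baseline)
    return [x for x in observed if abs(x - mu) / sigma > threshold]


# Hypothetical file-write counts per minute for one storage bucket.
baseline = [100, 110, 95, 105, 98, 102]
observed = [104, 900]  # 900 mimics a mass-encryption burst

flagged = flag_anomalies(baseline, observed)
print(flagged)
```

No labels are needed, which is why this style can catch novel behavior. The cost is that lowering `threshold` to catch subtler attacks also flags more benign spikes, which is the false-positive trade-off the article describes.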

screenshotone.com

Misleading monitor alerts: Turns out, the villain was example.com blocking those pesky automated requests. No real service drama here. Just a wake-up call to tame those testing environments!

linkedin.com

Lift-and-shift plays like a hit song on the AM radio—it’s a decent tune, but lacks soul. Cloud-native? Now that's a rock concert, shaking the ground with power. Redefine apps, don't just shuffle the old stuff upstairs.

spacelift.io

Ansible's file module shakes up file and directory management on remote systems, letting you tweak ownership and permissions like a pro. Unlike the copy module, it zeroes in solely on file attributes, keeping it idempotent and streamlining automation across your whole fleet.
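
The idempotency idea can be sketched in a few lines: check the current state first, and only change it when it differs from the desired state. This is an illustration of the principle on a local POSIX file, not Ansible's implementation; `ensure_mode` is a hypothetical helper name.

```python
import os
import stat
import tempfile


def ensure_mode(path: str, mode: int) -> bool:
    """Set permission bits on path only if they differ; return True if changed."""
    current = stat.S_IMODE(os.stat(path).st_mode)
    if current == mode:
        return False  # already in the desired state, nothing to do
    os.chmod(path, mode)
    return True


# Temporary files are created with mode 0o600 on POSIX systems.
with tempfile.NamedTemporaryFile(delete=False) as handle:
    demo_path = handle.name

first = ensure_mode(demo_path, 0o640)   # state differs, so a change is made
second = ensure_mode(demo_path, 0o640)  # state matches, so nothing happens
os.remove(demo_path)
print(first, second)
```

Running the same task twice reports a change only the first time, which is what makes repeated runs across a fleet safe.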

🎦 Videos, Talks & Presentations

youtube.com

Learn how Discord managed to index billions of messages, how its initial architectural decisions were challenged as the system scaled to trillions of messages, and what the team did to address these issues.

⚙️ Tools, Apps & Software

github.com

What are the principles we can use to build LLM-powered software that is actually good enough to put in the hands of production customers?

🤔 Did you know?

Did you know that Slack built Flannel, a geo-distributed edge cache and query engine, to optimize session bootstrapping and real-time messaging at scale? Flannel sits between WebSocket connections and backend services, maintaining in-memory workspace snapshots, serving lazy-loaded data on demand, and preemptively enriching on message fan‑outs—helping Slack handle millions of connections and queries per second without dropping the user experience.
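
The lazy-loading part of that design can be sketched as a read-through cache: nothing is fetched until a client asks for it, and repeat requests never reach the backend. The class and function names below are hypothetical and this is not Slack's code; it only shows the general pattern.

```python
from typing import Callable


class LazyCache:
    """Read-through cache that fetches an entry the first time it is requested."""

    def __init__(self, fetch: Callable[[str], dict]) -> None:
        self._fetch = fetch
        self._store: dict[str, dict] = {}
        self.backend_calls = 0  # counts trips to the backend, for illustration

    def get(self, key: str) -> dict:
        if key not in self._store:
            self.backend_calls += 1
            self._store[key] = self._fetch(key)
        return self._store[key]


def fetch_user(user_id: str) -> dict:
    """Stand-in for a backend lookup (hypothetical)."""
    return {"id": user_id, "name": f"user-{user_id}"}


cache = LazyCache(fetch_user)
cache.get("U1")
cache.get("U1")  # served from memory
cache.get("U2")
print(cache.backend_calls)
```

Three lookups cost two backend calls here; at millions of connections, that difference is the whole point of putting a cache at the edge.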

🤖 Once, SenseiOne Said

"Automation builds the tools, but it's the untamed edge cases that forge the true craftsman."

— Sensei

👤 This Week's Human

Spotlight on Dorian Lazzari, the mind behind Cubish and its bold plan to grid the Earth into 5.1 trillion geolocated cubes. As CEO, Dorian shapes a future where digital spaces lock into real-world locations through Cube Domains. His push toward a Spatial Web brings a world where places and data fuse. Known for sharp problem-solving and team-building grit, Dorian steered Cubish from stealth mode to a patent-pending stage. The result? A multi-layered digital shell, ready to snap into tomorrow’s immersive tech.

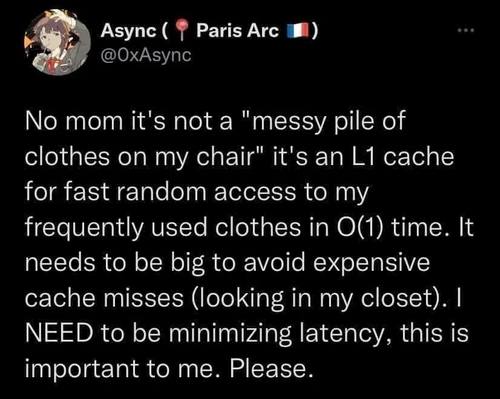

😂 Meme of the week