🔗 Stories, Tutorials & Articles

How AI data integration transforms your data stack

AI data integration automates manual ETL chores: schema mapping, transformation, and anomaly detection. Deployments speed up. Machine learning models ingest structured, semi-structured, and unstructured formats, and feed real-time pipelines with governance and security built in.

Infra shift: AI-driven pipelines absorb schema drift, pointing toward self-healing data infrastructure.
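To make "schema drift" concrete, here is a minimal sketch of the detection step. It is a plain rule-based check, not the ML-driven approach the article describes, and the `EXPECTED_SCHEMA` columns and the `detect_schema_drift` helper are hypothetical names chosen for illustration.

```python
from typing import Any

# Hypothetical expected schema (assumption): column name -> Python type.
EXPECTED_SCHEMA = {"order_id": int, "amount": float, "currency": str}


def detect_schema_drift(
    record: dict[str, Any], expected: dict[str, type]
) -> dict[str, list[str]]:
    """Compare one incoming record against the expected schema."""
    # Columns the schema requires but the record lacks.
    missing = sorted(k for k in expected if k not in record)
    # Columns the record carries but the schema does not know.
    unexpected = sorted(k for k in record if k not in expected)
    # Columns present in both whose value has the wrong type.
    type_changed = sorted(
        k for k, t in expected.items()
        if k in record and not isinstance(record[k], t)
    )
    return {
        "missing": missing,
        "unexpected": unexpected,
        "type_changed": type_changed,
    }


if __name__ == "__main__":
    incoming = {"order_id": "A-17", "amount": 12.5, "region": "eu"}
    print(detect_schema_drift(incoming, EXPECTED_SCHEMA))
```

A self-healing pipeline would act on this report, for example by remapping a renamed column, rather than only flagging it.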

The Future of Threat Emulation: Building AI Agents that Hunt Like Cloud Adversaries

AI agents tap MCP servers and Strands Agents. They fire off tools that chart IAM permission chains and sniff out AWS privilege escalations. Enter the “Sum of All Permissions” method. It abuses EC2 Instance Connect, pivots through SSM to pull data, and hops between roles, following paths that static scanners miss.

Trend to watch: AI-driven threat emulation makes old playbooks obsolete, unleashing constant, evolving cloud exploits.
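One way to read "charting permission chains" is as a graph walk over which principal can assume which role, followed by a union of the permissions along the way. The sketch below is a defensive-audit illustration under that assumption. It is not the article's tooling: `ASSUME_EDGES` and `PERMISSIONS` are a hand-written, hypothetical inventory, and nothing here calls AWS.

```python
from collections import deque

# Hypothetical inventory (assumption): principal -> roles it can assume.
ASSUME_EDGES = {
    "dev-user": ["ci-role"],
    "ci-role": ["deploy-role"],
    "deploy-role": [],
    "audit-role": [],
}

# Hypothetical inventory (assumption): principal -> directly attached actions.
PERMISSIONS = {
    "dev-user": {"ec2:DescribeInstances"},
    "ci-role": {"ssm:SendCommand"},
    "deploy-role": {"iam:PassRole", "s3:GetObject"},
    "audit-role": {"cloudtrail:LookupEvents"},
}


def reachable_principals(start: str, edges: dict[str, list[str]]) -> list[str]:
    """Breadth-first walk over role-assumption edges, in visit order."""
    seen = [start]
    queue = deque([start])
    while queue:
        current = queue.popleft()
        for nxt in edges.get(current, []):
            if nxt not in seen:
                seen.append(nxt)
                queue.append(nxt)
    return seen


def effective_permissions(
    start: str, edges: dict[str, list[str]], permissions: dict[str, set[str]]
) -> set[str]:
    """Union of permissions across every principal reachable from start."""
    total: set[str] = set()
    for principal in reachable_principals(start, edges):
        total |= permissions.get(principal, set())
    return total


if __name__ == "__main__":
    print(reachable_principals("dev-user", ASSUME_EDGES))
    print(sorted(effective_permissions("dev-user", ASSUME_EDGES, PERMISSIONS)))
```

The point of the union is that no single policy looks dangerous on its own; the risk only shows up once the chain is followed end to end.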

|

| |

|

| |

|

| |

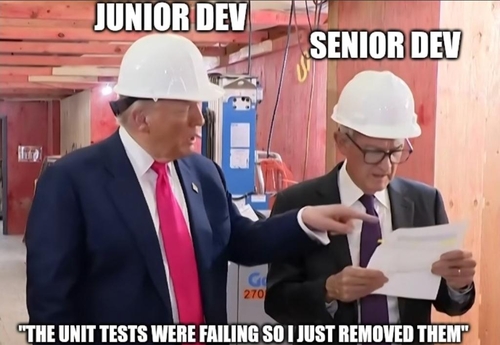

| The vibe coder's career path is doomed |

| |

| |

An AI-powered dev workflow combined Claude, Playwright, and a Postgres-backed REST API to ship 2–3 features per day. But as complexity grew, multi-agent loops broke down, tests ballooned, and schema drift demanded increasingly precise prompts and manual corrections. The result: more time spent managing context and debugging automation than writing code — exposing the technical debt baked into LLM-driven development.

Implication: The role of the engineer is morphing from creator to curator of machine-generated complexity. As LLMs accelerate output, they offload syntax but amplify cognitive overhead: debugging opaque logic, aligning fragmented context, and safeguarding brittle systems. Without strong architecture and deep domain fluency, teams risk trading velocity for shallow control and compounding fragility.

How I Use Claude Code to Ship Like a Team of Five

Claude Code zips out Ruby functions, tests, and pull requests via CLI prompts across multiple git worktrees. It cuts manual typing and replaces IDE plugins. It spins up ephemeral test environments to reproduce bugs, digs into external gem code, and syncs branches, commits, and PRs in one go.

The Big LLM Architecture Comparison

Architectures since GPT-2 still ride transformers. They improve memory use and performance with RoPE, adopt GQA or swap it for MLA, add sparse MoE layers, and use sliding-window attention. Teams also move RMSNorm placement and add QK-Norm to stabilize training across modern models.

Trend to watch: In 2025, small, smart optimizations will matter more than big, complex system redesigns.
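As one example of these small optimizations, sliding-window attention limits each token to a fixed number of recent positions instead of the full history. The sketch below builds such a mask in plain Python. The convention that the window counts the current token is an assumption (implementations differ), and `sliding_window_mask` is an illustrative name, not a library function.

```python
def sliding_window_mask(seq_len: int, window: int) -> list[list[bool]]:
    """Build a causal sliding-window attention mask.

    mask[i][j] is True when query position i may attend to key position j.
    Assumption: the window counts the current token, so window=1 means
    each token attends only to itself.
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    return [
        # Causal (j <= i) and within the last `window` positions.
        [(j <= i) and (i - j < window) for j in range(seq_len)]
        for i in range(seq_len)
    ]


if __name__ == "__main__":
    for row in sliding_window_mask(seq_len=5, window=3):
        print("".join("x" if allowed else "." for allowed in row))
```

Each row has at most `window` allowed positions, so the key-value cache that has to be kept per layer stops growing with sequence length.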

AI As Profoundly Abnormal Technology

Scott Alexander’s team argues that AI is a profoundly abnormal technology on track for recursive self-improvement within 2–10 years. They counter the slow, regulated diffusion predicted by “AI As A Normal Technology” (AIANT) by showing that LLMs are being adopted rapidly in medicine, law, and software, bypassing institutional controls.

Trend to watch: Bottom-up LLM adoption is outpacing gatekeepers, accelerating unsupervised AI integration.

The Evolution of AI Job Orchestration: The AI-Native Control Plane & Orchestration that Finally Works for ML

SkyPilot runs an AI-native control plane on Neocloud Kubernetes. It binds GPU pools across clouds into one resilient grid. Teams define ML jobs in a single YAML file. SkyPilot handles gang scheduling, SSH/Jupyter access, and multi-cluster compute, along with automatic failover and cost-aware scheduling.

Infra shift: AI-native orchestration unifies GPU silos and crushes lock-in.

Seeing like an LLM

LLMs function as next-token predictors. With scant user context, they hallucinate, spinning fresh backstories. As these models morph into autonomous agents, context engineering (feeding in facts, memory, tools, and guardrails) helps keep rogue behavior in check.

Trend to watch: A jump in context engineering. It pins LLMs to real facts, curbs hallucinations, and tames misalignment.
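A rough sketch of what context engineering can look like in practice: gather the facts, memory, tools, and guardrails into labeled sections and place them ahead of the user message. The `AgentContext` class, the `build_prompt` helper, and the section layout are all hypothetical; the article does not prescribe a format.

```python
from dataclasses import dataclass, field


@dataclass
class AgentContext:
    """Hypothetical container for the four context types named above."""
    facts: list[str] = field(default_factory=list)
    memory: list[str] = field(default_factory=list)
    tools: list[str] = field(default_factory=list)
    guardrails: list[str] = field(default_factory=list)


def build_prompt(ctx: AgentContext, user_message: str) -> str:
    """Assemble a prompt with one labeled section per non-empty context type."""
    sections = [
        ("Facts", ctx.facts),
        ("Memory", ctx.memory),
        ("Tools", ctx.tools),
        ("Guardrails", ctx.guardrails),
    ]
    parts = []
    for title, items in sections:
        if items:  # Skip empty sections so the prompt stays compact.
            bullet_list = "\n".join(f"- {item}" for item in items)
            parts.append(f"## {title}\n{bullet_list}")
    parts.append(f"## User\n{user_message}")
    return "\n\n".join(parts)


if __name__ == "__main__":
    ctx = AgentContext(
        facts=["The user's plan is the free tier."],
        guardrails=["Do not quote prices that are not listed in Facts."],
    )
    print(build_prompt(ctx, "Can I export my data?"))
```

The design choice is to keep the context explicit and inspectable, so a wrong answer can be traced back to what the model was or was not given.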

From Raw Data to Model Serving: A Blueprint for the AI/ML Lifecycle with

The post maps out a Kubeflow Pipelines workflow built on Spark, Feast, and KServe. It tackles fraud detection end-to-end: data prep, feature store, live inference. It turns infra into code, keeps features consistent between training and serving, and registers ONNX models in the Kubeflow Model Registry.

👉 Got something to share? Create your FAUN Page and start publishing your blog posts, tools, and updates. Grow your audience, and get discovered by the developer community.