🔗 Stories, Tutorials & Articles

GPT-5 is here

GPT-5 reasons more tightly and lands cleaner hits in math, science, finance, and law. It outpaces GPT-4, not just in breadth but in depth.

Which LLM writes the best analytical SQL?

Tinybird tested 19 top LLMs against a 200M-row GitHub dataset to see how well they could turn plain English into solid SQL. Most models produced syntactically valid queries, but when it came to SQL that ran efficiently and returned the right results, they lagged behind human experts. Messy schemas and tricky prompts tripped them up most.

Building an AI Home Security System Using .NET, Python, CLIP, Semantic Kernel, Telegram, and Raspberry Pi 4 ♻️

The post walks through building an AI home security system with .NET, Python, Semantic Kernel, a Telegram bot, a Raspberry Pi 4, and OpenAI. It covers the hardware and software requirements and the steps to install and test the camera module and the PIR (passive infrared) sensor, and includes code snippets for detecting motion with the PIR sensor, capturing photos, and sending alerts through the Telegram bot.

Forcing LLMs to be evil during training can make them nicer in the long run

Researchers built an automated pipeline to hunt down the neural activity patterns behind bad LLM behavior: sycophancy, hallucinations, malice, the usual suspects. Then they used those patterns to flag the behaviors in real time.

Rather than only steering models after training, as most approaches do, Anthropic baked the corrections into the training loop itself. That move made their models tougher: less prone to picking up nasty habits, even from noisy or biased data.

System shift: Editing behavior during training with activity-level signals could beat the pants off post-hoc steering, and save a lot of compute in the process.

A practical guide on how to use the GitHub MCP server

GitHub offers a managed MCP (Model Context Protocol) endpoint that simplifies infrastructure management and streamlines AI workflows, including collaboration and code review.

|

| |

|

| |

|

| |

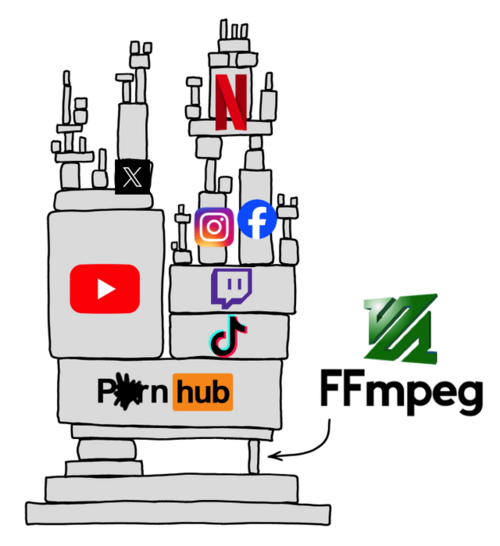

| Perplexity is using stealth, undeclared crawlers to evade website no-crawl directives |

| |

| |

Perplexity's stealth crawlers disguise their identity to bypass websites' crawling preferences, behavior that has led to the company being delisted and blocked for breaking with established crawling norms.

👉 Got something to share? Create your FAUN Page and start publishing your blog posts, tools, and updates. Grow your audience and get discovered by the developer community.